
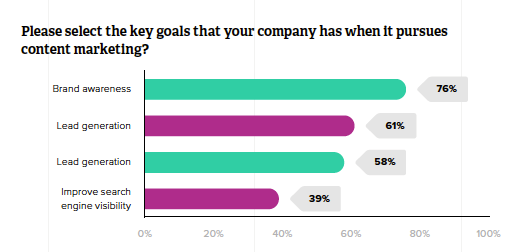

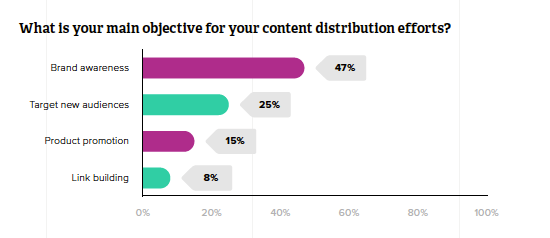

Zazzle Media has released their annual State of Content Marketing 2019 survey, which found that less than one in ten marketers (9%) will be focusing on Digital PR in 2019. Despite this, over three quarters (76%) state that brand awareness is a key performance indicator for them.

On top of this, 25% of content marketers will stop participating in offline PR activity, as it has been perceived as an ineffective channel for them in recent years. There appears to be a disconnect between marketers’ desired goals and the tactics they need to carry out to achieve them. So why are marketers seemingly less concerned about off-page distribution, and why should you make a case for Digital PR to hold a key position in your marketing activities?

Brand awareness

Whilst written blog content will appeal to people on the site, we need a mechanism that drives these people towards the site first. Digital PR can help users find your site in a more organic way rather than in a targeted advertorial manner.

The survey found that a quarter of marketers want to target new audiences through content distribution, but without Digital PR this will prove to be a difficult task.

Brand protection

PR allows you to control narratives and get involved with industry conversations which you would otherwise be unable to participate in. The digital aspect also allows you to receive real-time updates on coverage that mentions your brand’s name and to put out an immediate response to stem negative feedback or amplify positive feedback. Protecting your brand, especially in the SERPs, is a powerful tool for PRs.

Read next: Organic reputation management & brand protection

Link building

A major perk of Digital PR campaigns is that they usually come with linkable assets that have a chance of being cited within media coverage. Link building has a reputation for relying on black-hat tactics such as paying for links and directory submissions. Digital PR allows you to avoid these techniques and the risks associated with them, and to build legitimate links from high-authority publications.

Read next: Five proven content formats to maximize link acquisition with digital PR

Managing director of Zazzle Media, Simon Penson, commented on the statistics:

What do you think of these findings? Let us know your thoughts on the results in the comments.

Kirsty Daniel is a Digital Marketing Executive at Zazzle Media. She can be found on Twitter @kirsty_daniel.

The post Survey: Less than 10% of marketers to focus on Digital PR in 2019 appeared first on Search Engine Watch.

from https://searchenginewatch.com/2019/04/12/digital-pr-2019/


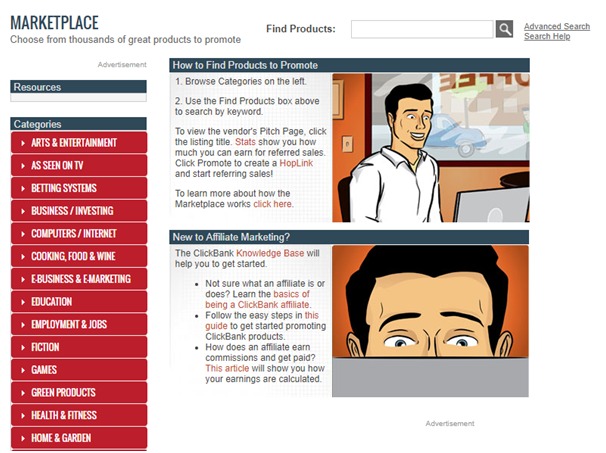

In all my years as an SEO consultant, I can’t begin to count the number of times I saw clients who were struggling to make both SEO and affiliate marketing work for them. When their site rankings dropped, they immediately started blaming it on the affiliate links. Yet what they really needed to do was review their search marketing efforts and make them align with their affiliate marketing efforts.

Both SEO and affiliate marketing have the same goal of driving relevant, high-quality traffic to a site so that those visits eventually turn into sales. So there’s absolutely no reason for them to compete against each other. Instead, they should work together in perfect balance so that the site generates more revenue. SEO done right can prove to be the biggest boon for your affiliate marketing efforts. It’s crucial that you take a strategic approach to align these two efforts.

Four ways to balance your affiliate marketing and SEO efforts

1. Find a niche that’s profitable for you

One of the reasons why affiliate marketing may clash with SEO is that you’re trying to sell too many different things from different product categories. So it’s extremely challenging to align your SEO efforts with your affiliate marketing because it’s all over the place. This means that you’ll have a harder time driving a targeted audience to your website. While your search rankings may be high for a certain product keyword, you may be struggling to attract visitors and customers for other products.

Instead of trying to promote everything and anything, pick one or two profitable niches to focus on. This is where it gets tricky. While you may naturally want to focus on niches in which you have a high level of interest and knowledge, they may not always be profitable. So I suggest you conduct some research about the profitability of potential niches. To conduct research, you can check resources that list the most profitable affiliate programs.
You can also use platforms like ClickBank to conduct this research. While you can use other affiliate platforms for your research, this is a great place to start. First, click on the “Affiliate Marketplace” button at the top of the ClickBank homepage.

You’ll see a page that gives you the option to search for products. On your left, you can see the various affiliate product categories available. Click on any of the categories that pique your interest.

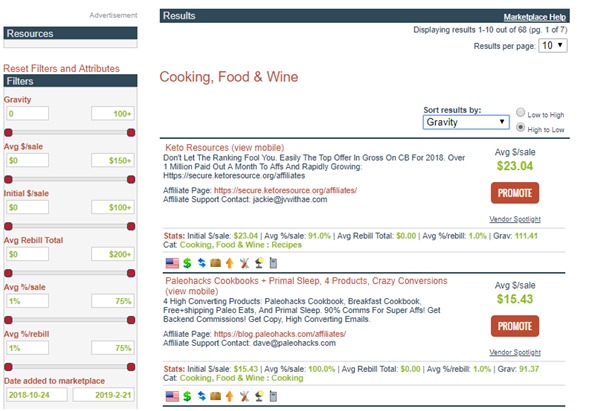

On the search results page, you’ll see some of the affiliate marketing programs available on the platform. The page also displays various details about the program including the average earning per sale. Then filter the search results by “Gravity,” which is a metric that measures how well a product sells in that niche.

You should ideally look for products with a Gravity score of 50 or higher. Compare the top Gravity scores of each category to see which is the most profitable. You can additionally compare the average earnings per sale for products in different categories.

2. Revise your keyword strategy

Since you’re already familiar with search marketing, I don’t need to tell you about the importance of keyword planning. That being said, I would recommend that you revise your existing keyword strategy after you’ve decided on a niche to focus on and the products you want to sell. The same keyword selection rules apply even in this process. You would want to work with keywords that have a significant search volume yet aren’t too competitive. And you will need to focus on long-tail keywords for more accuracy.

While you should still use the Google Keyword Planner, I suggest you try out other tools as well for fresh keyword ideas. Among the free tools, Google Trends is an excellent option. It gives you a clear look at the changes in interest for your chosen search term. You can filter the result by category, time frame, and region. It also gives you a breakdown of how the interest changes according to the sub-region.

The best part about this tool is that if you scroll down, you can also see some of the related queries. This will give you insights into some of the other terms related to your original search term with rising popularity. So you can get some quick ideas for trending and relevant keywords to target.

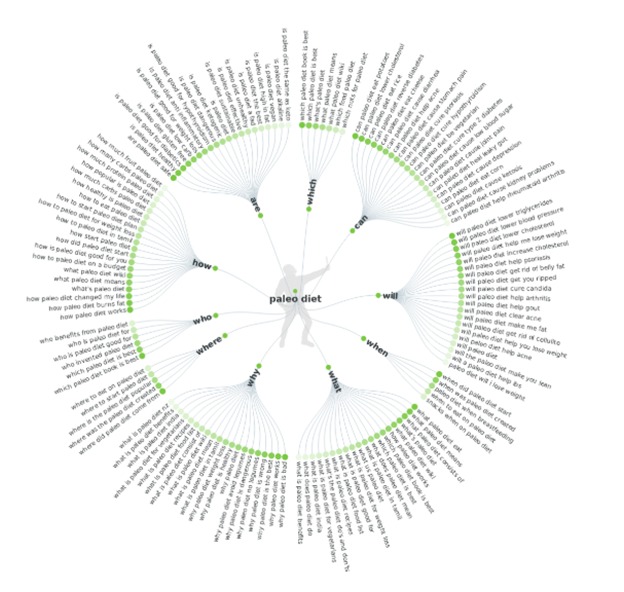

AnswerThePublic is another great tool for discovering long-tail keyword ideas. This tool gives you insights into some of the popular search queries related to your search term. So you’ll be able to come up with ideas for keywords to target as well as topic ideas for fresh content.

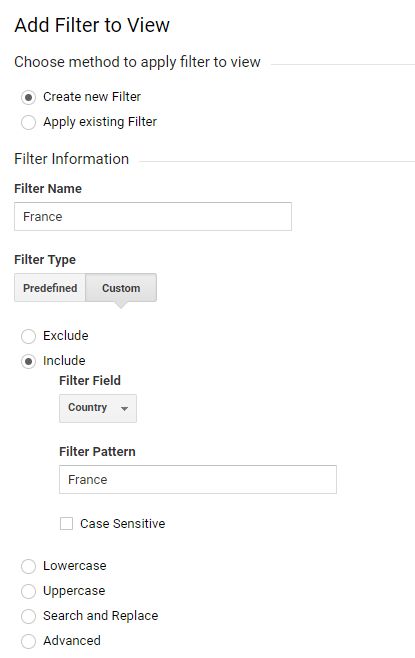

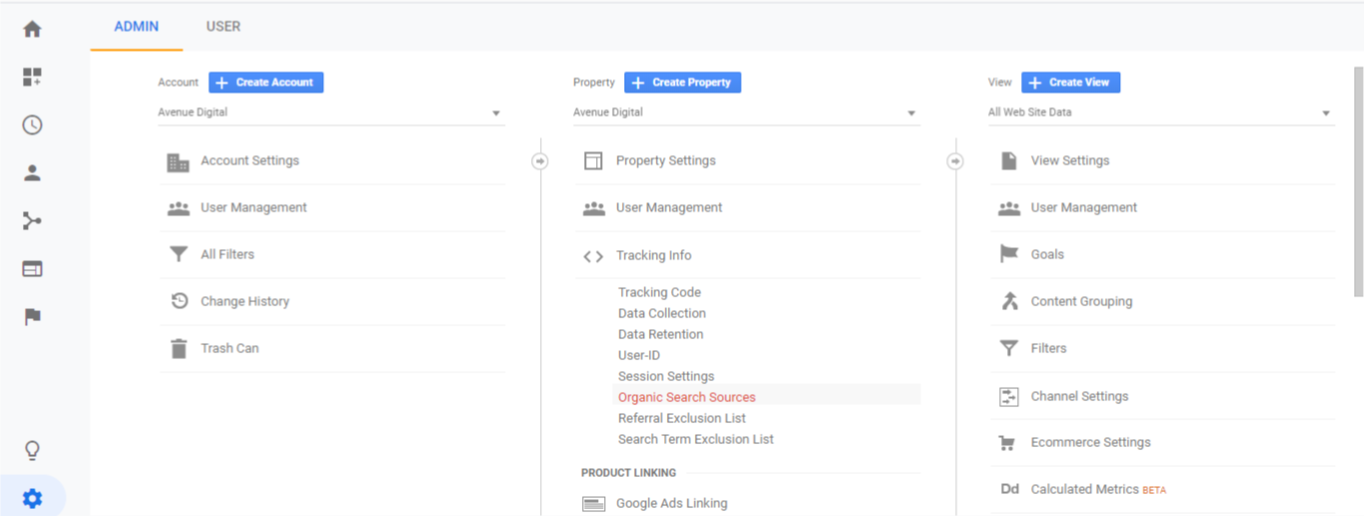

3. Optimize your website content

High-quality content is the essence of a successful SEO strategy. It also serves the purpose of educating and converting visitors for affiliate websites. So it’s only natural that you will need to optimize the content on your website. You can either create fresh content or update your existing content, or you can do both.

Use your shortlisted keywords to come up with content ideas. These keywords have a high search volume, so you know that people are searching for content related to them. So when you create content optimized with those keywords, you’ll gain some visibility in their search results. And since you’re providing them with the content they need, you will be driving them to your site.

You can also update your existing content with new and relevant keywords. To add more value, you can even include new information such as tips, stats, updates, and more. Whatever you decide to do, make sure the content is useful for your visitors. It shouldn’t be too promotional; instead, it needs to be informative.

4. Build links to boost site authority and attract high-quality traffic

You already know that building high-quality backlinks can improve the authority of your site and, therefore, your search rankings. So try to align your link-building efforts with your affiliate marketing by earning backlinks from sites that are relevant to the products you’re promoting.

Of course, you can generate more social signals by trying to drive more content shares. But those efforts aren’t always enough, especially if you want to drive more revenue. I suggest you try out guest posting, as it can help you tap into the established audience of a relevant, authoritative site. This helps you drive high-quality traffic to your site. It also boosts your page and domain authority since you’re getting a link back from a high-authority site. Although Matt Cutts said in 2014 that guest posting for SEO is dead, that’s not true if you plan your approach.
The problem is when you try to submit guest posts just for the sake of getting backlinks. Most reputable sites don’t allow that anymore. To get guest posting right, you need to make sure that you’re creating content that has value. So it needs to be relevant to the audience of your target site, and it should be helpful to them somehow. Your guest posts should be of exceptional quality in terms of writing, readability, and information. Not only does this improve your chances of getting accepted, but it also helps you gain authority in the niche. Plus, you will get to reach an engaged and relevant audience and later direct them to your site depending on how compelling your post is.

Bottom line

SEO and affiliate marketing can work in perfect alignment if you strategically balance your efforts. These tips should help you get started with aligning the two aspects of your business. You will need some practice and experimentation before you can perfectly balance them. You can further explore more options and evolve your strategy as you get better at the essentials.

Shane Barker is a Digital Strategist, Brand and Influencer Consultant. He can be found on Twitter @shane_barker.

The post How to perfectly balance affiliate marketing and SEO appeared first on Search Engine Watch.

from https://searchenginewatch.com/2019/04/11/how-to-perfectly-balance-affiliate-marketing-and-seo/

Your international marketing campaigns hinge on one crucial element: how well you have understood your audience. As with all marketing, insight into the user behavior, preferences and needs of your market is a must. However, if you do not have feet on the ground in these markets, you may be struggling to understand why your campaigns are not hitting the mark. Thankfully you have a goldmine of data about your customers’ interests, behavior, and demographics already at your fingertips.
Wherever your international markets are, Google Analytics should be your first destination for drawing out actionable insights.

Setting up Google Analytics for international insight

Google Analytics is a powerful tool but the sheer volume of data available through it can make finding usable insights tough. The first step for getting the most out of Google Analytics is ensuring it has been set up in the most effective way. This needs to encompass the following:

Also read: An SEO’s guide to Google Analytics terms

1. Setting up views for geographic regions

Depending on your current Google Analytics set-up you may already have more than one profile and view for your website data. What insight you want to get from your data will influence how you set up this first stage of filtering.

If you want to understand how the French pages are being accessed and interacted with then you may wish to create a filter based on the folder structure of your site, such as the “/fr-fr/” sub-folder of your site. However, this will show you information on visitors who arrive on these pages from any geographic location. If your hreflang tags aren’t correct and Google is serving your French pages to a Canadian audience, then you will be seeing Canadian visitors’ data under this filter too.

If you are interested in only seeing how French visitors interact with the website, no matter where on the site they end up, then a geographic filter is better. Here’s an example.

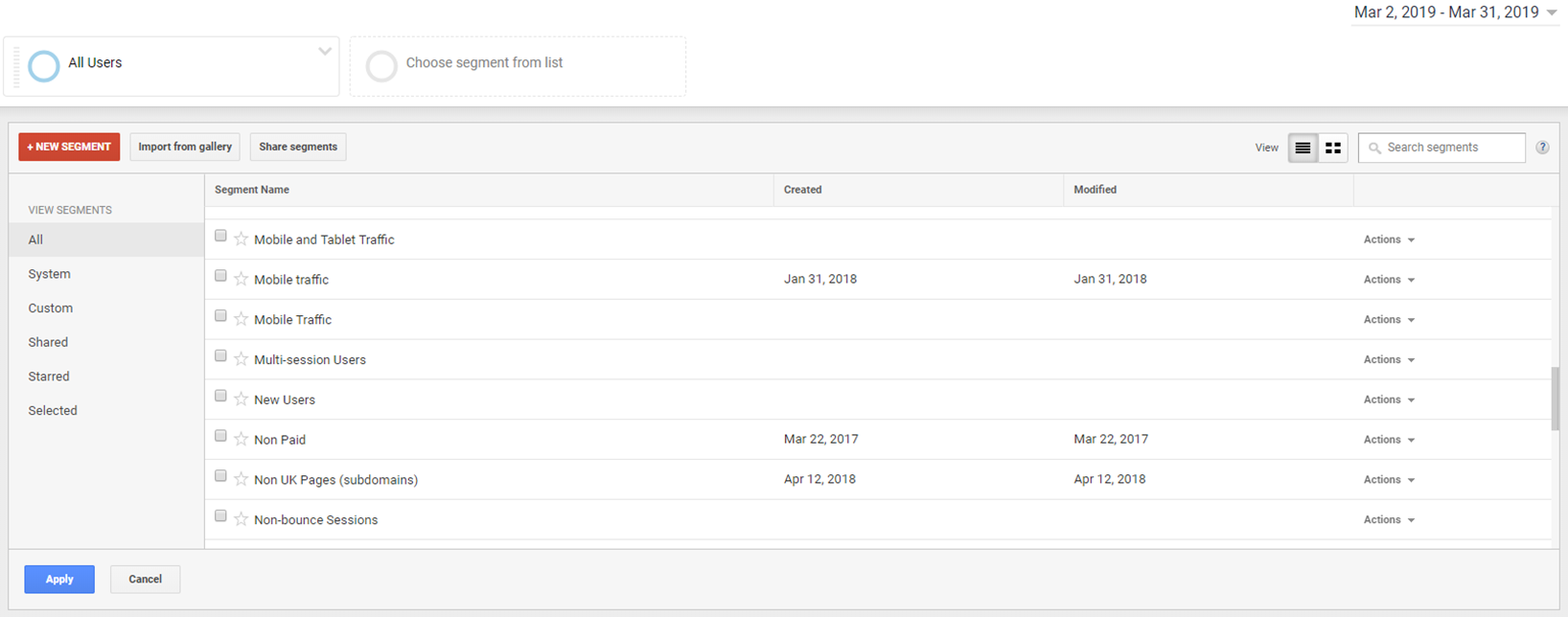
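The difference between the two filter types can be sketched on a handful of made-up session records. This is only an illustration of the logic, not the Google Analytics API: the record fields `landing_page` and `country` and the helper names are assumptions chosen for this example.

```python
from collections import Counter

# Hypothetical session records; the field names are illustrative only.
sessions = [
    {"landing_page": "/fr-fr/produits/", "country": "France"},
    {"landing_page": "/fr-fr/produits/", "country": "Canada"},
    {"landing_page": "/en-gb/products/", "country": "France"},
    {"landing_page": "/en-gb/products/", "country": "United Kingdom"},
]


def by_subfolder(records, prefix):
    """Mimic a sub-folder filter: keep sessions landing under the prefix."""
    return [r for r in records if r["landing_page"].startswith(prefix)]


def by_country(records, country):
    """Mimic a geographic filter: keep sessions from one country."""
    return [r for r in records if r["country"] == country]


french_pages = by_subfolder(sessions, "/fr-fr/")
french_visitors = by_country(sessions, "France")

# The sub-folder filter still contains the Canadian session.
print(Counter(r["country"] for r in french_pages))
# The geographic filter follows French visitors across every section.
print(Counter(r["landing_page"] for r in french_visitors))
```

The sub-folder filter answers "who sees the French pages?", while the geographic filter answers "what do visitors in France see?", which is why the two can disagree when hreflang targeting is off.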

2. Setting up segments per target area

Another way to identify how users from different locations are responding to your website and digital marketing is to set up segments within Google Analytics based on user demographics. Segments let you see a subset of your data and, unlike filters, do not permanently alter the data you are viewing. Segments allow you to narrow down your user data based on a variety of criteria, such as which campaign led visitors to the website, the language in which they are viewing the content, and their age.

To set up a segment in Google Analytics click on “All Users” at the top of the screen. This will bring up all of the segments currently available in your account.

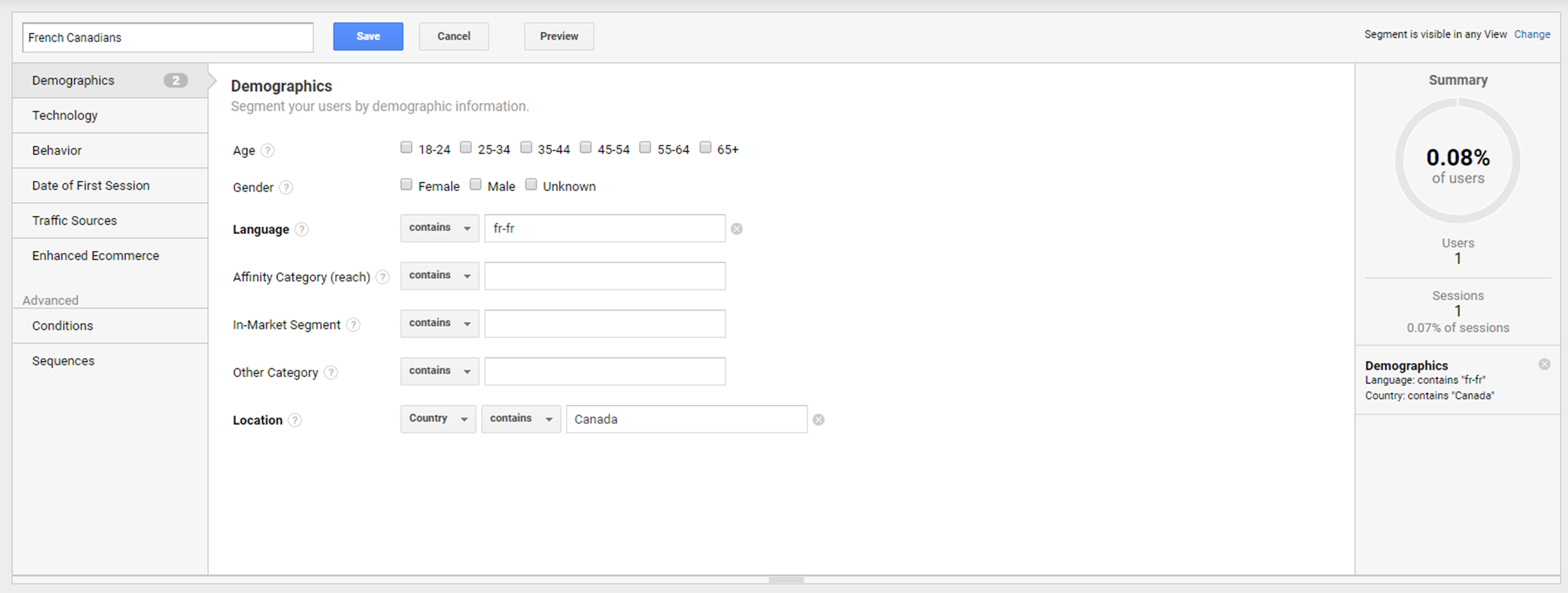

To create a new segment click “New Segment” and configure the fields to include or exclude the relevant visitors from your data. For instance, to get a better idea of how French-Canadian visitors interact with your website you might create a segment that only includes French-speaking Canadians. To do this you can set your demographics to include “fr” in the “Language” field, which with the “contains” match type covers values such as “fr-ca” as well as “fr-fr”, and “Canada” in the “Location” field.
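As a rough sketch of what such a segment does, the snippet below applies the same two conditions to made-up visitor records. The records, field names and the choice to match on the substring "fr" (so that both "fr-ca" and "fr-fr" qualify) are assumptions for illustration, not Google Analytics internals.

```python
# Hypothetical visitor records; field names are illustrative only.
visitors = [
    {"language": "fr-ca", "country": "Canada"},
    {"language": "en-ca", "country": "Canada"},
    {"language": "fr-fr", "country": "France"},
    {"language": "fr", "country": "Canada"},
]


def in_segment(visitor, language_contains, country):
    """True when the visitor meets both the language and location conditions."""
    return (
        language_contains in visitor["language"]
        and visitor["country"] == country
    )


# A segment is a read-only subset: the original list is left untouched.
segment = [v for v in visitors if in_segment(v, "fr", "Canada")]
share = len(segment) / len(visitors)

print(f"{len(segment)} of {len(visitors)} visitors ({share:.0%}) are in the segment")
```

The share printed at the end is the kind of sanity check worth doing before saving a segment: a value close to 0% or 100% usually means a condition was entered incorrectly.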

Use the demographic fields to tailor your segment to include visitors from certain locations speaking specific languages.

The segment “Summary” will give you an indication of what proportion of your visitors would be included in this segment, which will help you sense-check whether you have set it up correctly. Once you have saved your new segment it will be available for you to overlay onto your data from any time period, even from before you set up the segment. This is unlike filters, which will only apply to data recorded after the filter was created.

Also read: A guide to the standard reports in Google Analytics – Audience reports

3. Ensuring your channels are recording correctly

A commonly missed step when setting up international targeting in Google Analytics is ensuring the entry points for visitors onto your site are tracking correctly. For instance, there are a variety of international search engines that Google Analytics counts as “referral” sources rather than organic traffic sources unless a filter is added to change this.

The best way to identify this is to review the websites listed as having driven traffic to your website by following the path Acquisition > All Traffic > Referrals. If you identify search engines among this list then there are a couple of solutions available to make sure credit for your marketing success is being assigned correctly.

First, visit the “Organic Search Sources” section in Google Analytics, which can be found under Admin > Property > Organic Search Sources.

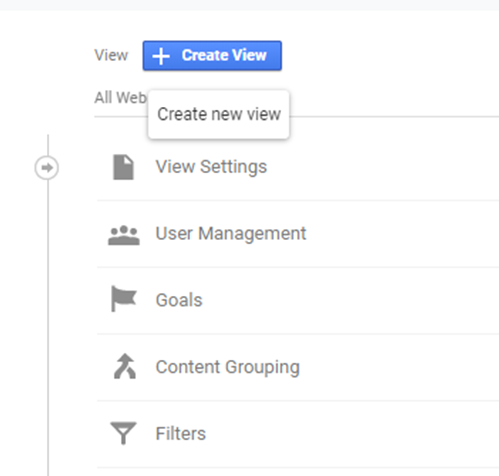

From here, you can simply add the referring domain of the search engine that is being recorded as a “referral” to the form. Google Analytics should start tracking traffic from that source as organic. Simple. Unfortunately, it doesn’t always work for every search engine. If you find the “Organic Search Sources” solution isn’t working, filters are a fool-proof solution but be warned, this will alter all your data in Google Analytics from the point the filter is put in place. Unless you have a separate unfiltered view available (which is highly recommended) then the data will not be recoverable and you may struggle to get an accurate comparison with data prior to the filter implementation. To set up a view without a filter you simply need to navigate to “Admin” and under “View” click “Create View”.

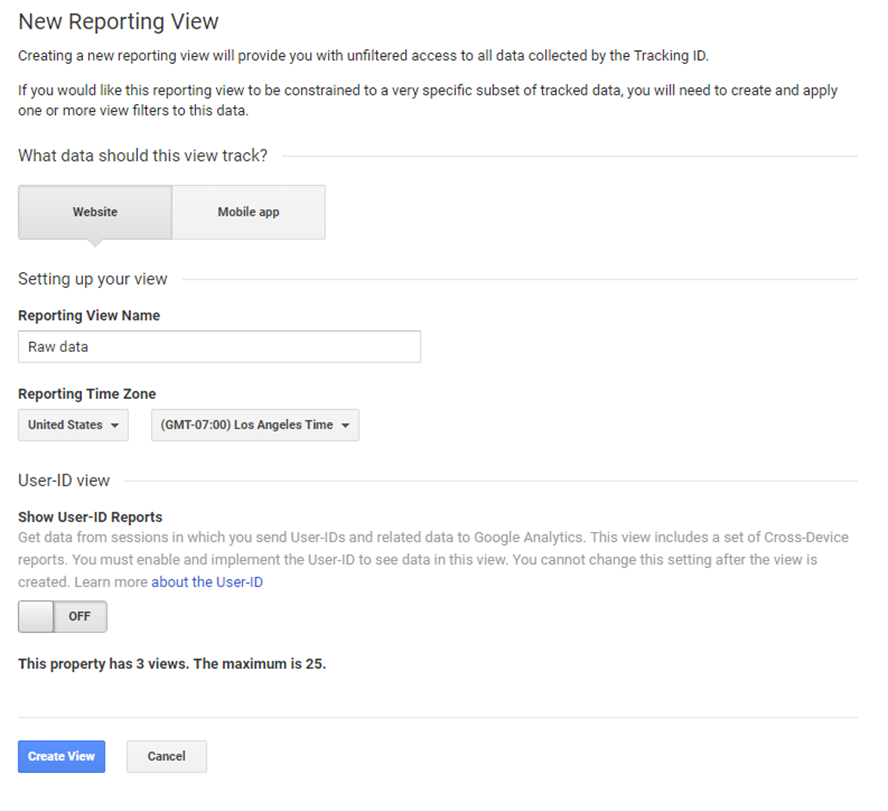

Name your unfiltered view “Raw data”, or something similar, to remind you that this view needs to remain free of filters.

To add a filter to the Google Analytics view that you want to have more accurate data in, go to “Filters” under the “View” that you want the data to be corrected for. Click “Add Filter” and select the “Custom” option. To change traffic from referral to organic, copy the settings below:

- Filter Type: Advanced
- Field A – Extract A: Referral (enter the domain of the website you want to reclassify traffic from)
- Field B – Extract B: Campaign Medium – referral
- Output To – Constructor: Campaign Medium – organic

Then ensure the “Field A Required”, “Field B Required”, and “Override Output Field” options are selected.

You may also notice that social media websites are listed among the referral sources. The same filter process applies to them. Just enter “social” rather than “organic” under the “Output To” field.

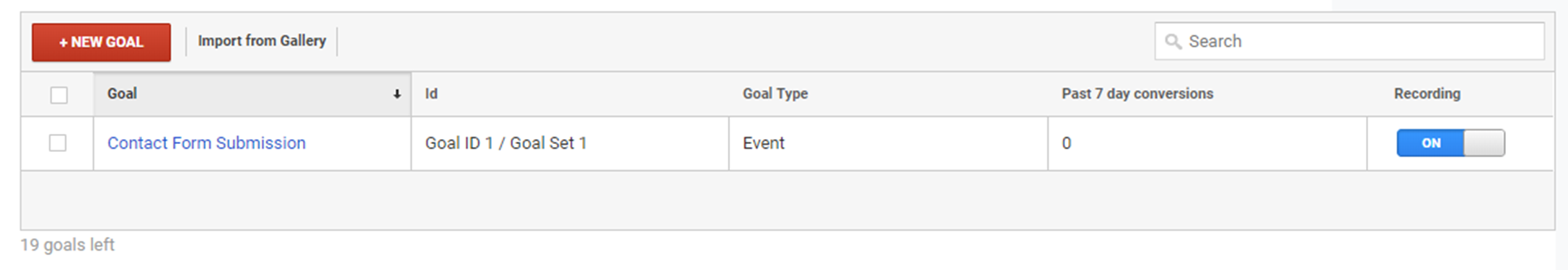
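The logic of this advanced filter can be sketched in a few lines. This is a simplified model, not how Google Analytics implements filters: the hit fields `referrer` and `medium`, the function name and the example domains are all hypothetical.

```python
def reclassify_medium(hit, domains, new_medium="organic"):
    """Return a copy of the hit with its medium rewritten when both
    conditions hold, mirroring "Field A Required" and "Field B Required"."""
    matches_domain = any(d in hit["referrer"] for d in domains)  # Field A
    is_referral = hit["medium"] == "referral"                    # Field B
    if matches_domain and is_referral:
        # "Override Output Field": replace the existing medium value.
        return {**hit, "medium": new_medium}
    return hit


# Hypothetical hits; the domains are placeholders, not real search engines.
hits = [
    {"referrer": "search.example", "medium": "referral"},
    {"referrer": "blog.example", "medium": "referral"},
    {"referrer": "social.example", "medium": "referral"},
]

as_organic = [reclassify_medium(h, ["search.example"]) for h in hits]
as_social = [reclassify_medium(h, ["social.example"], "social") for h in as_organic]

for hit in as_social:
    print(hit["referrer"], "->", hit["medium"])
```

Because the function returns new records instead of changing the originals, the `hits` list plays the role of the unfiltered “Raw data” view: the reclassified copy can always be compared against it.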

4. Setting goals per user group

Once you have a better idea of how users from different locations use your website you may want to set up some independent goals specific to those users in Google Analytics. This could be, for example, a measure of how many visitors download a PDF in Chinese. This goal might not be pertinent to your French visitors’ view, but it is a very important measure of how well your website content is performing for your Chinese audience.

Goals are simple to create in Google Analytics: navigate to “Admin” and, under the view that you want to add the goal to, click “Goals”. This will bring up a screen that displays any current goals set up in your view and, if you have edit-level permissions in Google Analytics, you can create a new one by clicking “New Goal”.

Once you have selected “New Goal” you will be given the option of setting up a goal from a template or creating a custom one. It is likely that you will need to configure a custom goal in order to track specific actions based off of events or page destinations. For example, if you are measuring how many people download a PDF you may track the “Download” button click events, or you may create a goal based on visitors going to the “Thank you” page that is displayed once a PDF is downloaded.

Most goals will need to be custom ones that allow you to track visitors completing specific events or navigating to destination pages.

With the number of goals you can set up under each view (which is limited to 20), it is likely that your goals will be different under each in order to drive the most relevant insight.

5. Filtering tables by location

An easy way to determine location-specific user behavior is to use the geographic dimensions to drill down further into the data that you are viewing. For instance, if you run an experiential marketing campaign in Paris to promote awareness of your products, then viewing the traffic that went to the French product pages of your website that day compared to a previous day could give you an indicator of success. However, what would be even more useful would be to see if interest in the website spiked for visitors from Paris. By applying “City” as a secondary dimension on the table of data you are looking at, you can get a more specific overview of how well the campaign performed in that region.

Dimensions available include “Continent”, “Sub-continent”, “Country”, “Region”, and “City”, as well as being able to split the data by “Language”.

Also read: How to integrate SEO into the translation process to maximize global success

Drawing intelligence from your data

Once you have your goals set up correctly you will be able to drill much further down into the data Google Analytics is presenting you with. An overview of how international users are navigating your site, interacting with content and their pain points is valuable in determining how to better optimize your website and marketing campaigns for conversion.

1. Creating personas

Many organizations will have created user personas at one stage or another, but it is valuable to review them periodically to ensure they are still relevant in the light of changes to your organization or the digital landscape. It is imperative that your geographic targeting has been set up correctly in Google Analytics to ensure your personas drive insight into your international marketing campaigns.

Creating personas using Google Analytics ensures they are based on real visitors who land on your website. This article from my agency, Avenue Digital, gives you step by step guidance on how to use your Google Analytics data to create personas, and how to use them for SEO.

2. Successful advertising mediums

One tip for maximizing the data in Google Analytics is discerning what the most profitable advertising medium is for that demographic. If you notice that a lot of your French visitors are coming to the website as a result of a PPC campaign advertising your products, but the traffic that converts the most is actually from Twitter, then you can focus on expanding your social media reach in that region. This may not be the same for your UK visitors who might arrive on the site and convert most from organic search results.
With the geographic targeting set up correctly in Google Analytics, you will be able to focus your time and budgets more effectively for each of your target regions, rather than employing a blanket approach based on unfiltered data.

3. Language

Determining the best language for your marketing campaigns and website may not be as simple as identifying the primary language for each country you are targeting. For example, Belgium has three official languages – Dutch, German, and French. Google Analytics can help you narrow down which of these languages is primarily used by the demographic that interacts with you the most online. If you notice that there are a lot of visitors from French-speaking countries landing on your website, but it is only serving content in English, then this forms a good base for diversifying the content on your site.

4. Checking the correctness of your online international targeting

An intricate and easy-to-get-wrong aspect of international marketing is signaling to the search engines what content you want available to searchers in different regions. Google Analytics allows you to audit how well international targeting has been understood and respected by the search engines. If you have filtered your data by a geographic section of your website, such as /en-gb/, but a high proportion of your organic traffic landing on this section of the site is from countries that have their own specified pages on the site, then this would suggest that your hreflang tags may need checking.

5. Identifying emerging markets

Google Analytics could help identify other markets that are not being served by your current products, website or marketing campaigns and that could prove very fruitful if tapped into. If through your analysis you notice that there is a large volume of visitors from a country you don’t currently serve then you can begin investigations into the viability of expanding into those markets.
Conclusion

As complex as Google Analytics may seem, once you have set it up correctly you can expect clarity over your data, as it makes drilling down into detail for each of your markets an easy job. The awareness of your markets that you gain can be the difference between your digital marketing efforts soaring or falling flat.

Helen Pollitt is the Head of SEO at Avenue Digital. She can be found on Twitter @HelenPollitt1.

The post How to get international insights from Google Analytics appeared first on Search Engine Watch.

from https://searchenginewatch.com/2019/04/10/how-to-get-international-insights-from-google-analytics/

JavaScript-powered websites are here to stay. As JavaScript in its many frameworks becomes an ever more popular resource for modern websites, SEOs must be able to guarantee their technical implementation is search engine-friendly. In this article, we will focus on how to optimize JS-websites for Google (although Bing also recommends the same solution, dynamic rendering).

The content of this article includes:

1. JavaScript challenges for SEO
2. Client-side and server-side rendering
3. How Google crawls websites
4. How to detect client-side rendered content
5. The solutions: Hybrid rendering and dynamic rendering

1. JavaScript challenges for SEO

React, Vue, Angular, Node, and Polymer. If at least one of these fancy names rings a bell, then most likely you are already dealing with a JavaScript-powered website. All these JavaScript frameworks provide great flexibility and power to modern websites. They open a large range of possibilities in terms of client-side rendering (like allowing the page to be rendered by the browser instead of the server), page load capabilities, dynamic content, user interaction, and extended functionalities.

If we only look at what has an impact on SEO, JavaScript frameworks can do the following for a website:

Unfortunately, if implemented without using a pair of SEO lenses, JavaScript frameworks can pose serious challenges to the page performance, ranging from speed deficiencies to render-blocking issues, or even hindering crawlability of content and links. There are many aspects that SEOs must look after when auditing a JavaScript-powered web page, which can be summarized as follows:

A lot of questions to answer. So where should an SEO start? Below are key guidelines for optimizing JS-websites, to enable the usage of these frameworks while keeping the search engine bots happy.

2. Client-side and server-side rendering: The best “frenemies”

Probably the most important piece of knowledge all SEOs need when they have to cope with JS-powered websites is an understanding of client-side and server-side rendering. Understanding the differences, benefits, and disadvantages of both is critical to deploying the right SEO strategy and not getting lost when speaking with software engineers (who eventually are the ones in charge of implementing that strategy).

Let’s look at how Googlebot crawls and indexes pages, putting it as a very basic sequential process:

1. The client (web browser) places several requests to the server, in order to download all the necessary information that will eventually display the page. Usually, the very first request concerns the static HTML document.
2. The CSS and JS files, referred to by the HTML document, are then downloaded: these are the styles, scripts and services that contribute to generating the page.
3. The Website Rendering Service (WRS) parses and executes the JavaScript (which can manage all or part of the content or just a simple functionality).

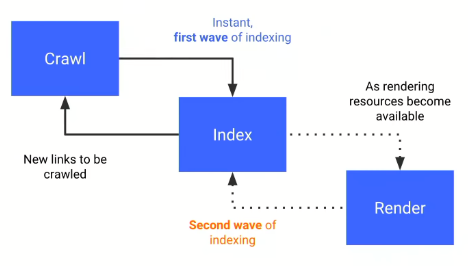

4. Caffeine (Google’s indexer) indexes the content found.
5. New links are discovered within the content for further crawling.

This is the theory, but in the real world, Google doesn’t have infinite resources and has to do some prioritization in the crawling.

3. How Google actually crawls websites

Google is a very smart search engine with very smart crawlers. However, it usually adopts a reactive approach when it comes to new technologies applied to web development. This means that it is Google and its bots that need to adapt to the new frameworks as they become more and more popular (which is the case with JavaScript). For this reason, the way Google crawls JS-powered websites is still far from perfect, with blind spots that SEOs and software engineers need to mitigate somehow.

This is in a nutshell how Google actually crawls these sites:

The above graph was shared by Tom Greenaway at the Google I/O 2018 conference, and what it basically says is this: if you have a site that relies heavily on JavaScript, you’d better load the JS content very quickly, otherwise we will not be able to render it (and hence index it) during the first wave, and it will be postponed to a second wave, and no one knows when that may occur. Therefore, your client-side rendered content based on JavaScript will probably be rendered by the bots in the second wave, because during the first wave they will load your server-side content, which should be fast enough. But they don’t want to spend too many resources and take on too many tasks. In Tom Greenaway’s words:

The implications for SEO are huge: your content may not be discovered until one, two or even five weeks later, and in the meantime, only your content-less page would be assessed and ranked by the algorithm. What an SEO should be most worried about at this point is this simple equation:

No content is found = Content is (probably) hardly indexable

And how would a content-less page rank? Easy to guess for any SEO. So far so good. The next step is learning if the content is rendered client-side or server-side (without asking software engineers).

4. How to detect client-side rendered content

Option one: The Document Object Model (DOM)

There are several ways to find out, and for this we need to introduce the concept of the DOM. The Document Object Model defines the structure of an HTML (or an XML) document, and how such documents can be accessed and manipulated. In SEO and software engineering we usually refer to the DOM as the final HTML document rendered by the browser, as opposed to the original static HTML document that lives on the server.

You can think of the HTML as the trunk of a tree. You can add branches, leaves, flowers, and fruits to it (that is the DOM). What JavaScript does is manipulate the HTML and create an enriched DOM that adds functionality and content.

In practice, you can check the static HTML by pressing “Ctrl+U” on any page you are looking at, and the DOM by “Inspecting” the page once it’s fully loaded. Most of the time, for modern websites, you will see that the two documents are quite different.

Option two: JS-free Chrome profile

Create a new profile in Chrome and disallow JavaScript through the content settings (access them directly here – chrome://settings/content). Any URL you browse with this profile will not load any JS content. Therefore, any blank spot in your page identifies a piece of content that is served client-side.
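Options one and two both come down to the same question: which pieces of content exist in the static HTML before any JavaScript runs? A minimal sketch of that check, using only Python’s standard library, is shown below. The sample page, the phrases and the helper names are made up for illustration; in practice you would paste in the source you see with “Ctrl+U”.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text from static HTML, ignoring <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def missing_from_static_html(static_html, expected_phrases):
    """Return the phrases that do not appear in the static HTML text,
    i.e. content that is probably injected client-side."""
    text = visible_text(static_html).lower()
    return [p for p in expected_phrases if p.lower() not in text]


# Made-up page: the product name only exists inside a script.
static_html = """<html><head><title>Shop</title></head>
<body><h1>Our products</h1><div id="app"></div>
<script>document.getElementById("app").innerHTML = "<p>Blue widget</p>";</script>
</body></html>"""

missing = missing_from_static_html(static_html, ["Our products", "Blue widget"])
print(missing)
```

Any phrase reported as missing is visible to users only after JavaScript has executed, so it is a candidate for the delayed second wave of indexing described earlier.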
Option three: Fetch as Google in Google Search Console

Provided that your website is registered in Google Search Console (I can’t think of any good reason why it wouldn’t be), use the “Fetch as Google” tool in the old version of the console. This will return a rendering of how Googlebot sees the page and a rendering of how a normal user sees it. Are there many differences?

Option four: Run Chrome version 41 in headless mode (Chromium)

Google officially stated in early 2018 that they use an older version of Chrome (specifically version 41, which anyone can download from here) in headless mode to render websites. The main implication is that a page that doesn’t render well in that version of Chrome may run into crawling problems.

Option five: Crawl the page on Screaming Frog using Googlebot

Run the crawl with the JavaScript rendering option disabled, and check whether the content and meta-content are rendered correctly by the bot.

After all these checks, still ask your software engineers, because you don’t want to leave any loose ends.

5. The solutions: Hybrid rendering and dynamic rendering

Asking a software engineer to roll back a piece of great development work because it hurts SEO can be a difficult task. It happens frequently that SEOs are not involved in the development process, and they are called in only when the whole infrastructure is in place.

We SEOs should all work on improving our relationship with software engineers and make them aware of the huge implications that any innovation can have on SEO. This is how a problem like content-less pages can be avoided from the get-go. The solution rests on two approaches.

Hybrid rendering

Also known as Isomorphic JavaScript, this approach aims to minimize the need for client-side rendering, and it doesn’t differentiate between bots and real users. Hybrid rendering suggests the following:

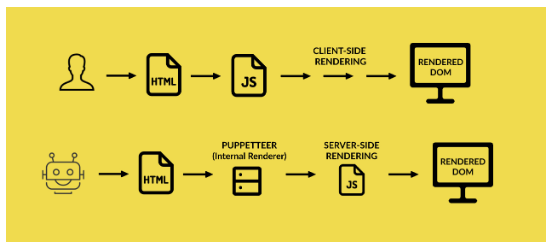

Dynamic rendering

This approach aims to distinguish requests placed by a bot from those placed by a user, and serves the page accordingly.
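The bot-versus-user decision can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production setup: the token list is a small hypothetical sample, the function names are invented for this example, and user-agent strings can be spoofed, so real deployments usually add further verification.

```python
# Hypothetical, non-exhaustive list of substrings found in crawler user agents.
BOT_TOKENS = ("googlebot", "bingbot", "yandexbot", "baiduspider", "duckduckbot")


def is_bot(user_agent):
    """Return True if the User-Agent header looks like a known crawler."""
    if not user_agent:
        return False
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(user_agent, prerendered_html, app_shell_html):
    """Serve pre-rendered HTML to bots and the JavaScript app shell to users.

    Both HTML arguments are assumed to be produced elsewhere, for example
    by a pre-rendering service and by the normal build respectively.
    """
    return prerendered_html if is_bot(user_agent) else app_shell_html


if __name__ == "__main__":
    googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    print(choose_response(googlebot_ua, "<p>Full content</p>", "<div id='app'></div>"))
```

Both versions should carry the same content. Serving bots something materially different from what users see moves from dynamic rendering into cloaking.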

The best of both worlds

Combining the two solutions can also provide great benefit to both users and bots.

Conclusion

As the use of JavaScript in modern websites grows every day, through many light and easy frameworks, requiring software engineers to rely solely on HTML to please search engine bots is neither realistic nor feasible. However, the SEO issues raised by client-side rendering solutions can be successfully tackled in different ways using hybrid rendering and dynamic rendering. Knowing the technology available, your website infrastructure, your engineers, and the solutions can guarantee the success of your SEO strategy even in complicated environments such as JavaScript-powered websites.

Giorgio Franco is a Senior Technical SEO Specialist at Vistaprint.

The post A survival kit for SEO-friendly JavaScript websites appeared first on Search Engine Watch.

from https://searchenginewatch.com/2019/04/09/a-survival-kit-for-seo-friendly-javascript-websites/

When SEO Was Easy

When I got started on the web over 15 years ago I created an overly broad & shallow website that had little chance of making money because it was utterly undifferentiated and crappy. In spite of my best (worst?) efforts while being a complete newbie, sometimes I would go to the mailbox and see a check for a couple hundred or a couple thousand dollars come in. My old roommate & I went to Coachella & when the trip was over I returned to a bunch of mail to catch up on & realized I had made way more while not working than what I spent on that trip. What was the secret to a total newbie making decent income by accident? Horrible spelling. Back then search engines were not as sophisticated with their spelling correction features & I was one of 3 or 4 people in the search index that misspelled the name of an online casino the same way many searchers did. The high minded excuse for why I did not scale that would be claiming I knew it was a temporary trick that was somehow beneath me. The more accurate reason would be thinking in part it was a lucky fluke rather than thinking in systems. If I were clever at the time I would have created the misspeller's guide to online gambling, though I think I was just so excited to make anything from the web that I perhaps lacked the ambition & foresight to scale things back then. In the decade that followed I had a number of other lucky breaks like that. One time one of the original internet bubble companies that managed to stay around put up a sitewide footer link targeting the concept that one of my sites made decent money from. This was just before the great recession, before Panda existed. The concept they targeted had 3 or 4 ways to describe it.
2 of them were very profitable & if they targeted either of the most profitable versions with that page the targeting would have sort of carried over to both. They would have outranked me if they targeted the correct version, but they didn't so their mistargeting was a huge win for me.

Search Gets Complex

Search today is much more complex. In the years since those easy-n-cheesy wins, Google has rolled out many updates which aim to feature sought after destination sites while diminishing the sites which rely on "one simple trick" to rank. Arguably the quality of the search results has improved significantly as search has become more powerful, more feature rich & has layered in more relevancy signals. Many quality small web publishers have gone away due to some combination of increased competition, algorithmic shifts & uncertainty, and reduced monetization as more ad spend was redirected toward Google & Facebook. But the impact as felt by any given publisher is not the impact as felt by the ecosystem as a whole. Many terrible websites have also gone away, while some formerly obscure though higher-quality sites rose to prominence. There was the Vince update in 2009, which boosted the rankings of many branded websites. Then in 2011 there was Panda as an extension of Vince, which tanked the rankings of many sites that published hundreds of thousands or millions of thin content pages while boosting the rankings of trusted branded destinations. Then there was Penguin, which was a penalty that hit many websites which had heavily manipulated or otherwise aggressive appearing link profiles. Google felt there was a lot of noise in the link graph, which was their justification for Penguin. There were updates which lowered the rankings of many exact match domains. And then increased ad load in the search results along with the other above ranking shifts further lowered the ability to rank keyword-driven domain names.
If your domain is generically descriptive then there is a limit to how differentiated & memorable you can make it if you are targeting the core market the keywords are aligned with. There is a reason eBay is more popular than auction.com, Google is more popular than search.com, Yahoo is more popular than portal.com & Amazon is more popular than a store.com or a shop.com. When that winner-take-most impact of many online markets is coupled with the move away from using classic relevancy signals the economics shift to where it makes a lot more sense to carry the heavy overhead of establishing a strong brand. Branded and navigational search queries could be used in the relevancy algorithm stack to confirm the quality of a site & verify (or dispute) the veracity of other signals. Historically relevant algo shortcuts become less appealing as they become less relevant to the current ecosystem & even less aligned with the future trends of the market. Add in negative incentives for pushing on a string (penalties on top of wasting the capital outlay) and a more holistic approach certainly makes sense.

Modeling Web Users & Modeling Language

PageRank was an attempt to model the random surfer. When Google is pervasively monitoring most users across the web they can shift to directly measuring their behaviors instead of using indirect signals. Years ago Bill Slawski wrote about the long click in which he opened by quoting Steven Levy's In the Plex: How Google Thinks, Works, and Shapes our Lives:

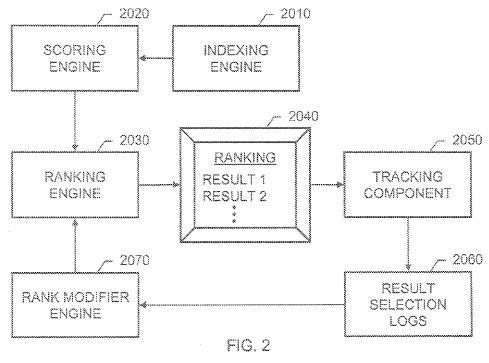

Of course, there's a patent for that. In Modifying search result ranking based on implicit user feedback they state:

If you are a known brand you are more likely to get clicked on than a random unknown entity in the same market. And if you are something people are specifically seeking out, they are likely to stay on your website for an extended period of time.

Attempts to manipulate such data may not work.

And just like Google can make a matrix of documents & queries, they could also choose to put more weight on search accounts associated with topical expert users based on their historical click patterns.

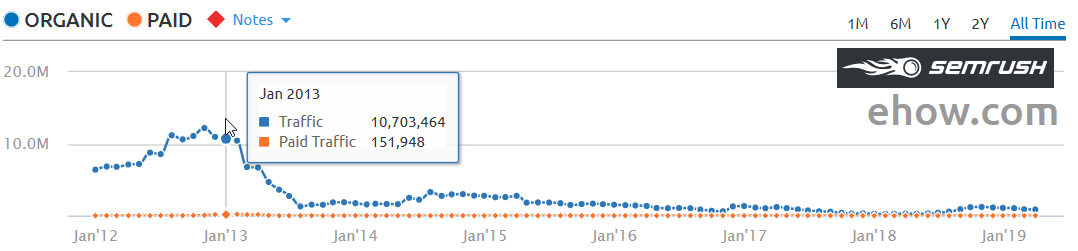

Google was using click data to drive their search rankings as far back as 2009. David Naylor was perhaps the first person who publicly spotted this. Google was ranking Australian websites for [tennis court hire] in the UK & Ireland, in part because that is where most of the click signal came from. That phrase was most widely searched for in Australia. In the years since Google has done a better job of geographically isolating clicks to prevent things like the problem David Naylor noticed, where almost all search results in one geographic region came from a different country. Whenever SEOs mention using click data to search engineers, the search engineers quickly respond about how they might consider any signal but clicks would be a noisy signal. But if a signal has noise an engineer would work around the noise by finding ways to filter the noise out or combine multiple signals. To this day Google states they are still working to filter noise from the link graph: "We continued to protect the value of authoritative and relevant links as an important ranking signal for Search." The site with millions of inbound links, few intentional visits & those who do visit quickly click the back button (due to a heavy ad load, poor user experience, low quality content, shallow content, outdated content, or some other bait-n-switch approach)...that's an outlier. Preventing those sorts of sites from ranking well would be another way of protecting the value of authoritative & relevant links. Best Practices Vary Across Time & By Market + CategoryAlong the way, concurrent with the above sorts of updates, Google also improved their spelling auto-correct features, auto-completed search queries for many years through a featured called Google Instant (though they later undid forced query auto-completion while retaining automated search suggestions), and then they rolled out a few other algorithms that further allowed them to model language & user behavior. 
Today it would be much harder to get paid above median wages explicitly for sucking at basic spelling or scaling some other individual shortcut to the moon, like pouring millions of low quality articles into a (formerly!) trusted domain. Nearly a decade after Panda, eHow's rankings still haven't recovered. Back when I got started with SEO the phrase Indian SEO company was associated with cut-rate work where people were buying exclusively based on price. Sort of like a "I got a $500 budget for link building, but can not under any circumstance invest more than $5 in any individual link." Part of how my wife met me was she hired a hack SEO from San Diego who outsourced all the work to India and marked the price up about 100-fold while claiming it was all done in the United States. He created reciprocal links pages that got her site penalized & it didn't rank until after she took her reciprocal links page down. With that sort of behavior widespread (hack US firm teaching people working in an emerging market poor practices), it likely meant many SEO "best practices" which were learned in an emerging market (particularly where the web was also underdeveloped) would be more inclined to being spammy. Considering how far ahead many Western markets were on the early Internet & how India has so many languages & how most web usage in India is based on mobile devices where it is hard for users to create links, it only makes sense that Google would want to place more weight on end user data in such a market. If you set your computer location to India Bing's search box lists 9 different languages to choose from. The above is not to state anything derogatory about any emerging market, but rather that various signals are stronger in some markets than others. And competition is stronger in some markets than others. Search engines can only rank what exists.

Impacting the Economics of PublishingNow search engines can certainly influence the economics of various types of media. At one point some otherwise credible media outlets were pitching the Demand Media IPO narrative that Demand Media was the publisher of the future & what other media outlets will look like. Years later, after heavily squeezing on the partner network & promoting programmatic advertising that reduces CPMs by the day Google is funding partnerships with multiple news publishers like McClatchy & Gatehouse to try to revive the news dead zones even Facebook is struggling with.

As mainstream newspapers continue laying off journalists, Facebook's news efforts are likely to continue failing unless they include direct economic incentives, as Google's programmatic ad push broke the banner ad:

Google is offering news publishers audience development & business development tools. Heavy Investment in Emerging Markets Quickly Evolves the MarketsAs the web grows rapidly in India, they'll have a thousand flowers bloom. In 5 years the competition in India & other emerging markets will be much tougher as those markets continue to grow rapidly. Media is much cheaper to produce in India than it is in the United States. Labor costs are lower & they never had the economic albatross that is the ACA adversely impact their economy. At some point the level of investment & increased competition will mean early techniques stop having as much efficacy. Chinese companies are aggressively investing in India.

RankBrainRankBrain appears to be based on using user clickpaths on head keywords to help bleed rankings across into related searches which are searched less frequently. A Googler didn't state this specifically, but it is how they would be able to use models of searcher behavior to refine search results for keywords which are rarely searched for. In a recent interview in Scientific American a Google engineer stated: "By design, search engines have learned to associate short queries with the targets of those searches by tracking pages that are visited as a result of the query, making the results returned both faster and more accurate than they otherwise would have been." Now a person might go out and try to search for something a bunch of times or pay other people to search for a topic and click a specific listing, but some of the related Google patents on using click data (which keep getting updated) mentioned how they can discount or turn off the signal if there is an unnatural spike of traffic on a specific keyword, or if there is an unnatural spike of traffic heading to a particular website or web page. And, since Google is tracking the behavior of end users on their own website, anomalous behavior is easier to track than it is tracking something across the broader web where signals are more indirect. Google can take advantage of their wide distribution of Chrome & Android where users are regularly logged into Google & pervasively tracked to place more weight on users where they had credit card data, a long account history with regular normal search behavior, heavy Gmail users, etc. Plus there is a huge gap between the cost of traffic & the ability to monetize it. You might have to pay someone a dime or a quarter to search for something & there is no guarantee it will work on a sustainable basis even if you paid hundreds or thousands of people to do it. 
Any of those experimental searchers will have no lasting value unless they influence rank, but even if they do influence rankings it might only last temporarily. If you bought a bunch of traffic into something genuine Google searchers didn't like then even if it started to rank better temporarily the rankings would quickly fall back if the real end user searchers disliked the site relative to other sites which already rank. This is part of the reason why so many SEO blogs mention brand, brand, brand. If people are specifically looking for you in volume & Google can see that thousands or millions of people specifically want to access your site then that can impact how you rank elsewhere. Even looking at something inside the search results for a while (dwell time) or quickly skipping over it to have a deeper scroll depth can be a ranking signal. Some Google patents mention how they can use mouse pointer location on desktop or scroll data from the viewport on mobile devices as a quality signal. Neural MatchingLast year Danny Sullivan mentioned how Google rolled out neural matching to better understand the intent behind a search query.

The above Tweets capture what the neural matching technology intends to do. Google also stated:

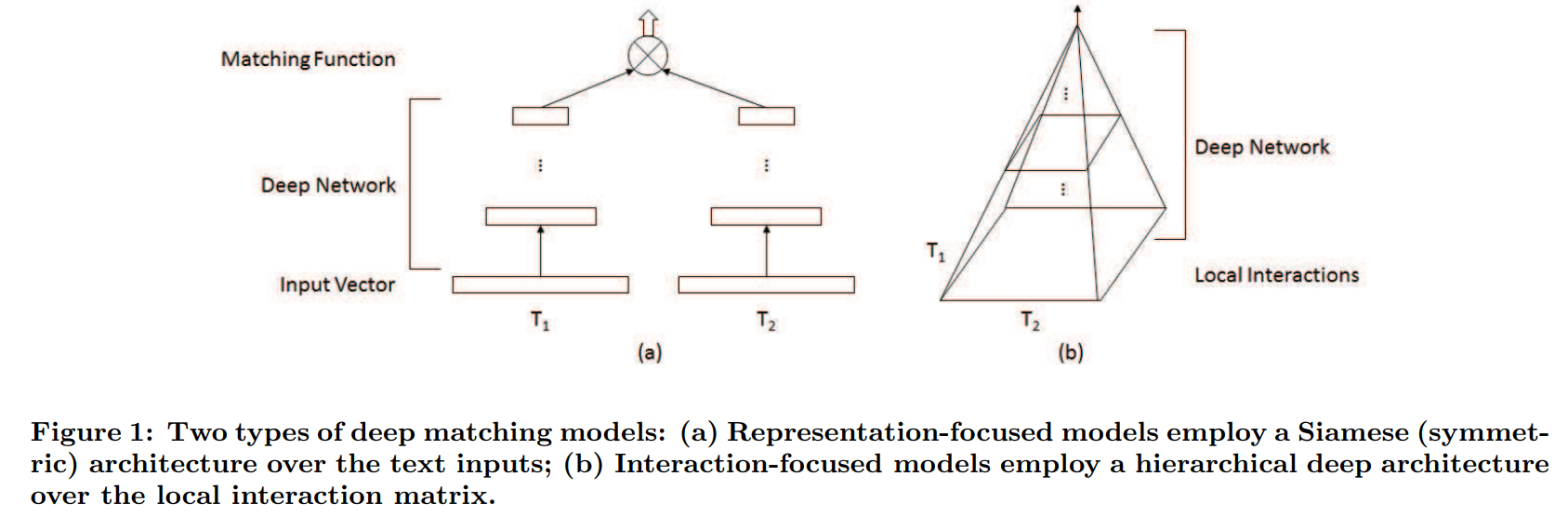

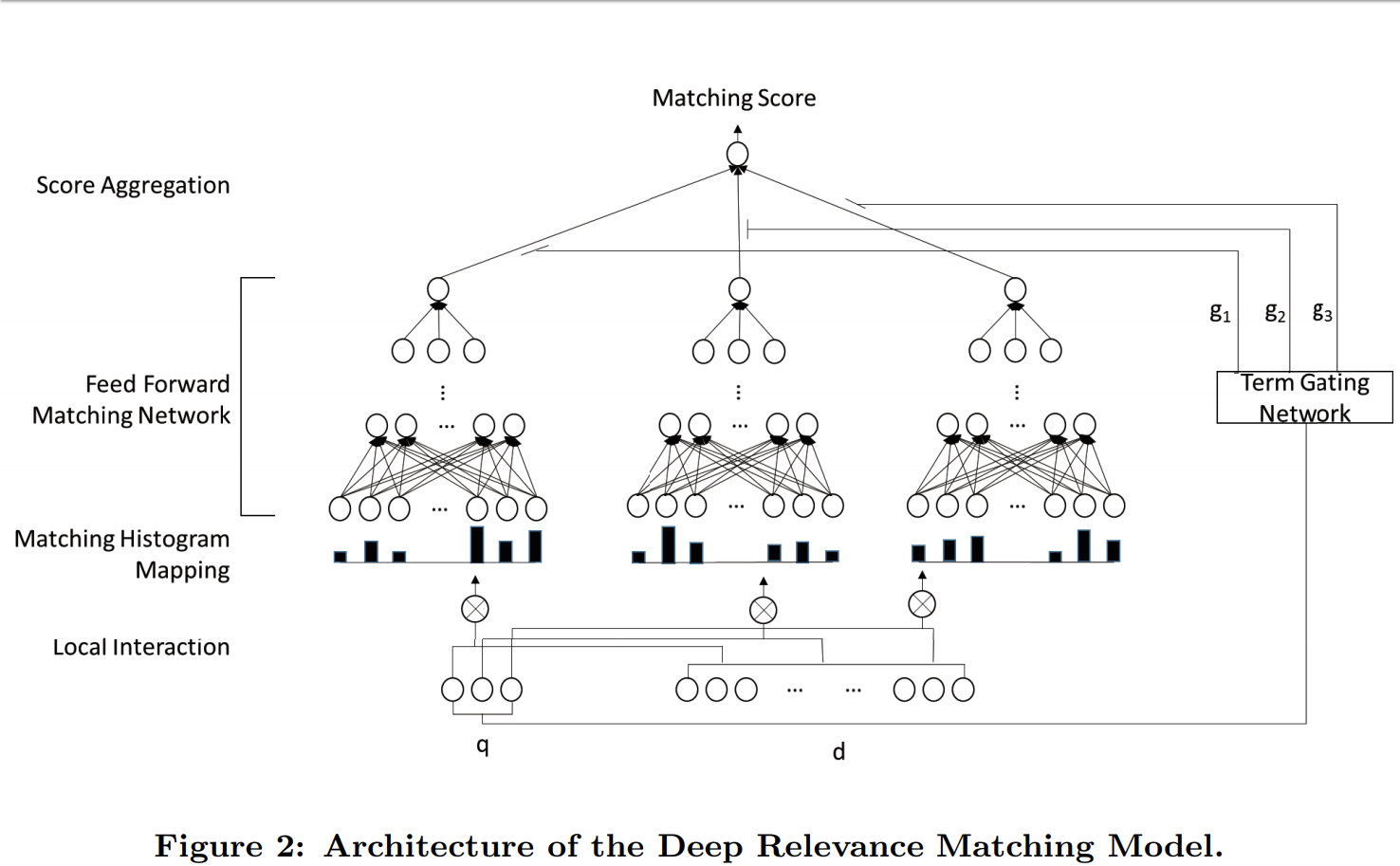

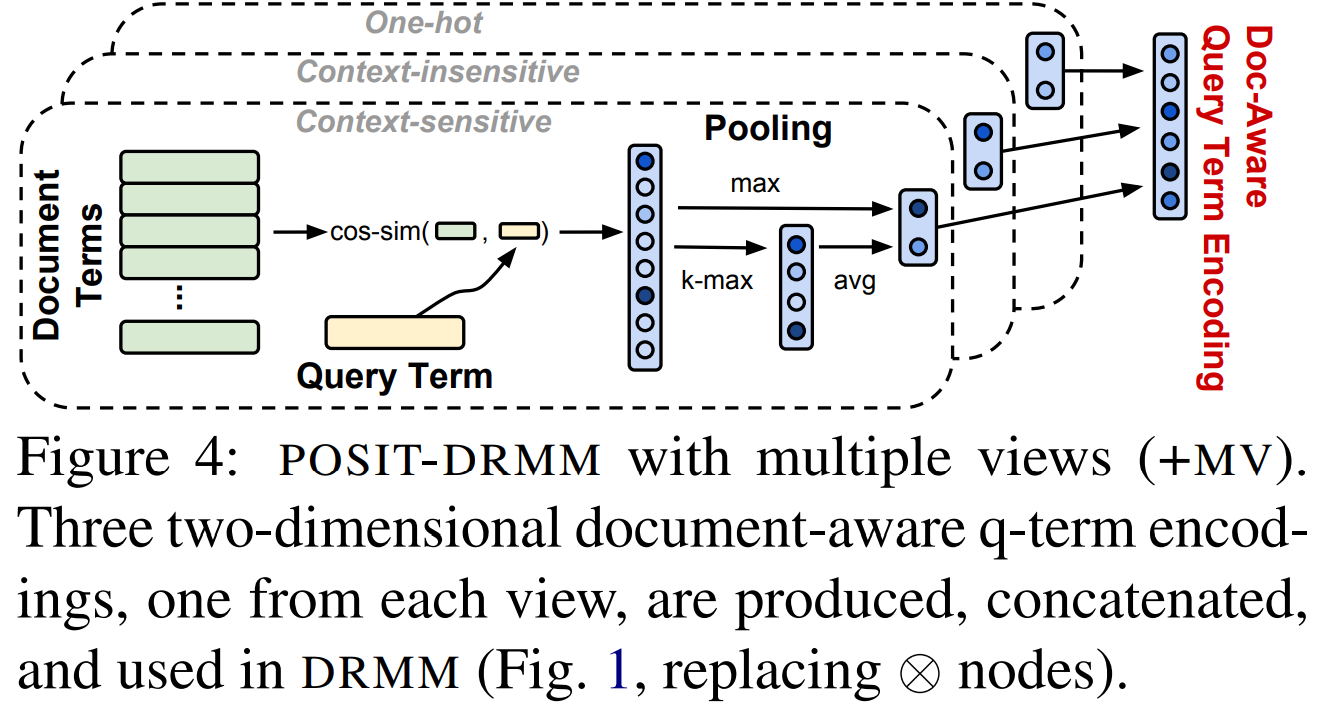

To help people understand the difference between neural matching & RankBrain, Google told SEL: "RankBrain helps Google better relate pages to concepts. Neural matching helps Google better relate words to searches." There are a couple research papers on neural matching. The first one was titled A Deep Relevance Matching Model for Ad-hoc Retrieval. It mentioned using Word2vec & here are a few quotes from the research paper

The paper mentions how semantic matching falls down when compared against relevancy matching because:

Here are a couple images from the above research paper

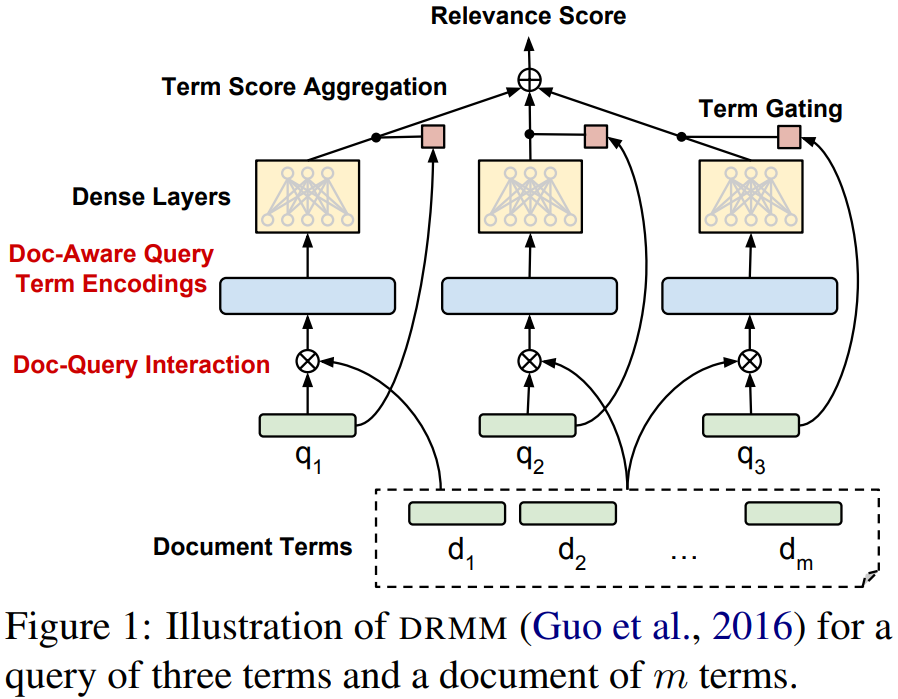

And then the second research paper is Deep Relevance Ranking Using Enhanced Document-Query Interactions. That same sort of re-ranking concept is being better understood across the industry. There are ranking signals that earn some base level ranking, and then results get re-ranked based on other factors like how well a result matches the user intent. Here are a couple images from the above research paper.

For those who hate the idea of reading research papers or patent applications, Martinibuster also wrote about the technology here. About the only part of his post I would debate is this one:

I think one should always consider user experience over other factors, however a person could still use variations throughout the copy & pick up a bit more traffic without coming across as spammy. Danny Sullivan mentioned the super synonym concept was impacting 30% of search queries, so there are still a lot which may only be available to those who use a specific phrase on their page. Martinibuster also wrote another blog post tying more research papers & patents to the above. You could probably spend a month reading all the related patents & research papers. The above sort of language modeling & end user click feedback complement links-based ranking signals in a way that makes it much harder to luck one's way into any form of success by being a terrible speller or just bombing away at link manipulation without much concern toward any other aspect of the user experience or market you operate in.

Pre-penalized Shortcuts

Google was even issued a patent for predicting site quality based upon the N-grams used on the site & comparing those against the N-grams used on other established sites where quality has already been scored via other methods: "The phrase model can be used to predict a site quality score for a new site; in particular, this can be done in the absence of other information. The goal is to predict a score that is comparable to the baseline site quality scores of the previously-scored sites." Have you considered using a PLR package to generate the shell of your site's content? Good luck with that as some sites trying that shortcut might be pre-penalized from birth.

Navigating the Maze

When I started in SEO one of my friends had a dad who is vastly smarter than I am. He advised me that Google engineers were smarter, had more capital, had more exposure, had more data, etc etc etc ... and thus SEO was ultimately going to be a malinvestment. Back then he was at least partially wrong because influencing search was so easy.
But in the current market, 16 years later, we are near the inflection point where he would finally be right. At some point the shortcuts stop working & it makes sense to try a different approach. The flip side of all the above changes is as the algorithms have become more complex they have gone from being a headwind to people ignorant about SEO to being a tailwind to those who do not focus excessively on SEO in isolation. If one is a dominant voice in a particular market, if they break industry news, if they have key exclusives, if they spot & name the industry trends, if their site becomes a must read & is what amounts to a habit ... then they perhaps become viewed as an entity. Entity-related signals help them & those signals that are working against the people who might have lucked into a bit of success become a tailwind rather than a headwind. If your work defines your industry, then any efforts to model entities, user behavior or the language of your industry are going to boost your work on a relative basis. This requires sites to publish frequently enough to be a habit, or publish highly differentiated content which is strong enough that it is worth the wait. Those which publish frequently without being particularly differentiated are almost guaranteed to eventually walk into a penalty of some sort. And each additional person who reads marginal, undifferentiated content (particularly if it has an ad-heavy layout) is one additional visitor that site is closer to eventually getting whacked. Success becomes self regulating. Any short-term success becomes self defeating if one has a highly opportunistic short-term focus. Those who write content that only they could write are more likely to have sustained success.

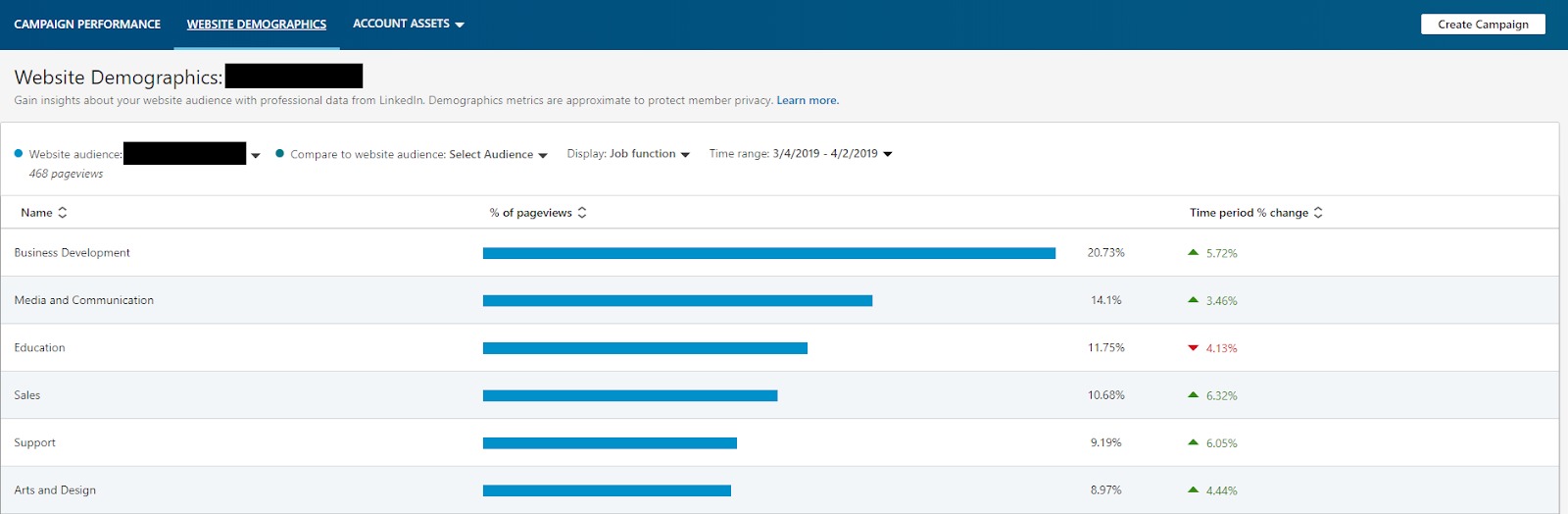

from http://www.seobook.com/keyword-not-provided-it-just-clicks When starting out a digital marketing program, you might not yet have a lot of internal data that helps you understand your target consumer. You might also have smaller budgets that do not allow for a large amount of audience research. So do you start throwing darts with your marketing? No way. It is critical to understand your target consumer to expand your audiences and segment them intelligently to engage them with effective messaging and creatives. Even at a limited budget, you have a few tools that can help you understand your target audience and the audience that you want to reach. We will walk through a few of these tools in further detail below. Five tools for audience research on a budgetTool #1 – In-platform insights (LinkedIn)If you already have a LinkedIn Ads account, you have a great place to gain insights on your target consumer, especially if you are a B2B lead generation business. In order to pull data on your target market, you must place the LinkedIn insight tag on your site. Once the tag has been placed, you will be able to start pulling audience data, which can be found on the website demographics tab. The insights provided include location, country, job function, job title, company, company industry, job seniority, and company size. You can look at the website as a whole or view specific pages on the site by creating website audiences. You can also compare the different audiences that you have created.

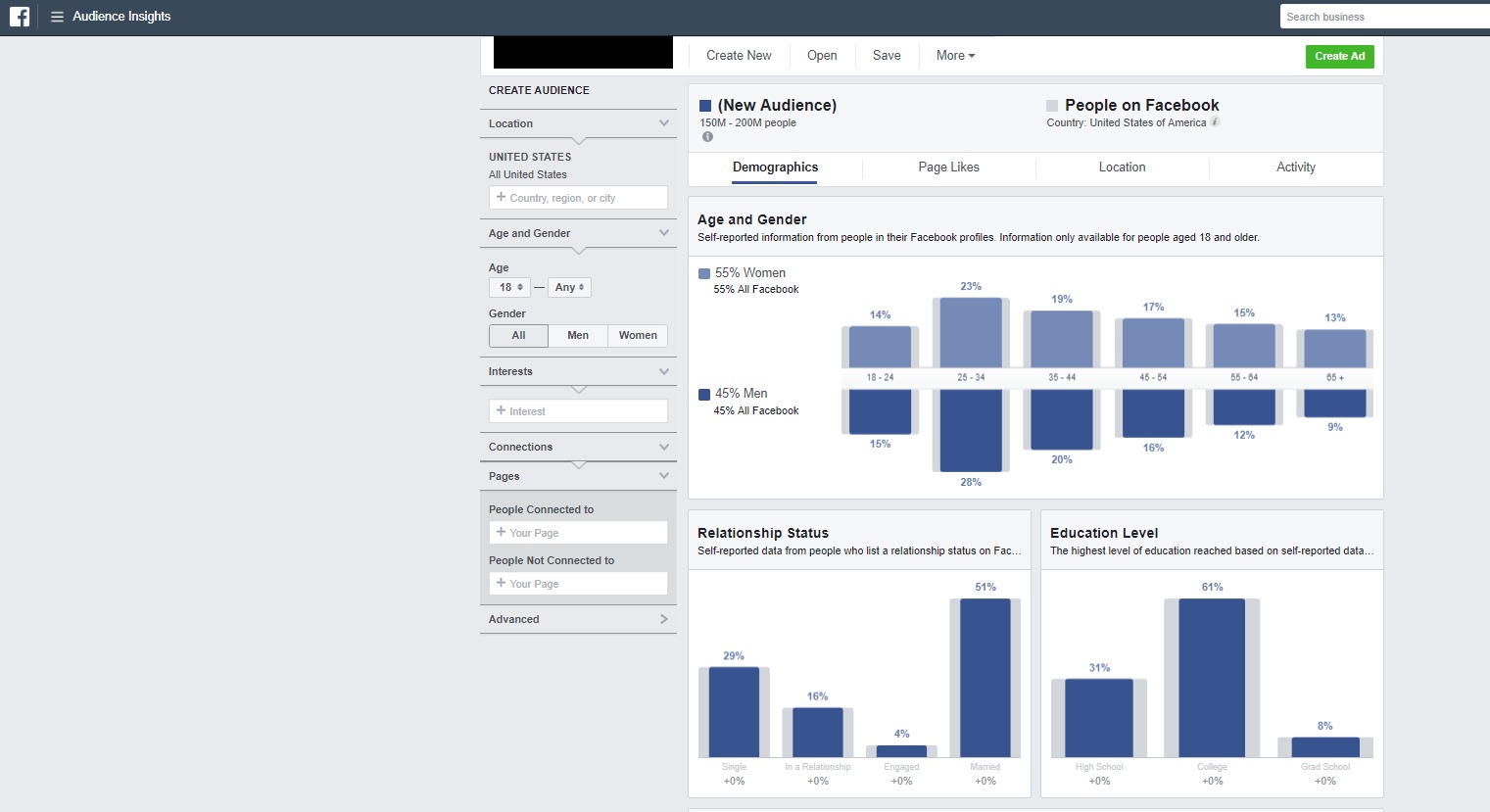

Tool #2 – In-platform insights (Facebook)Facebook’s Audience Insights tool allows you to gain more information about the audience interacting with your page. It also shows you the people interested in your competitors’ pages. You can see a range of information about people currently interacting with your page by selecting “People connected to your page.” To find out information about the users interacting with competitor pages, select “Interests” and type the competitor page or pages. The information that you can view includes age and gender, relationship status, education level, job title, page likes, location (cities, countries, and languages), and device used.

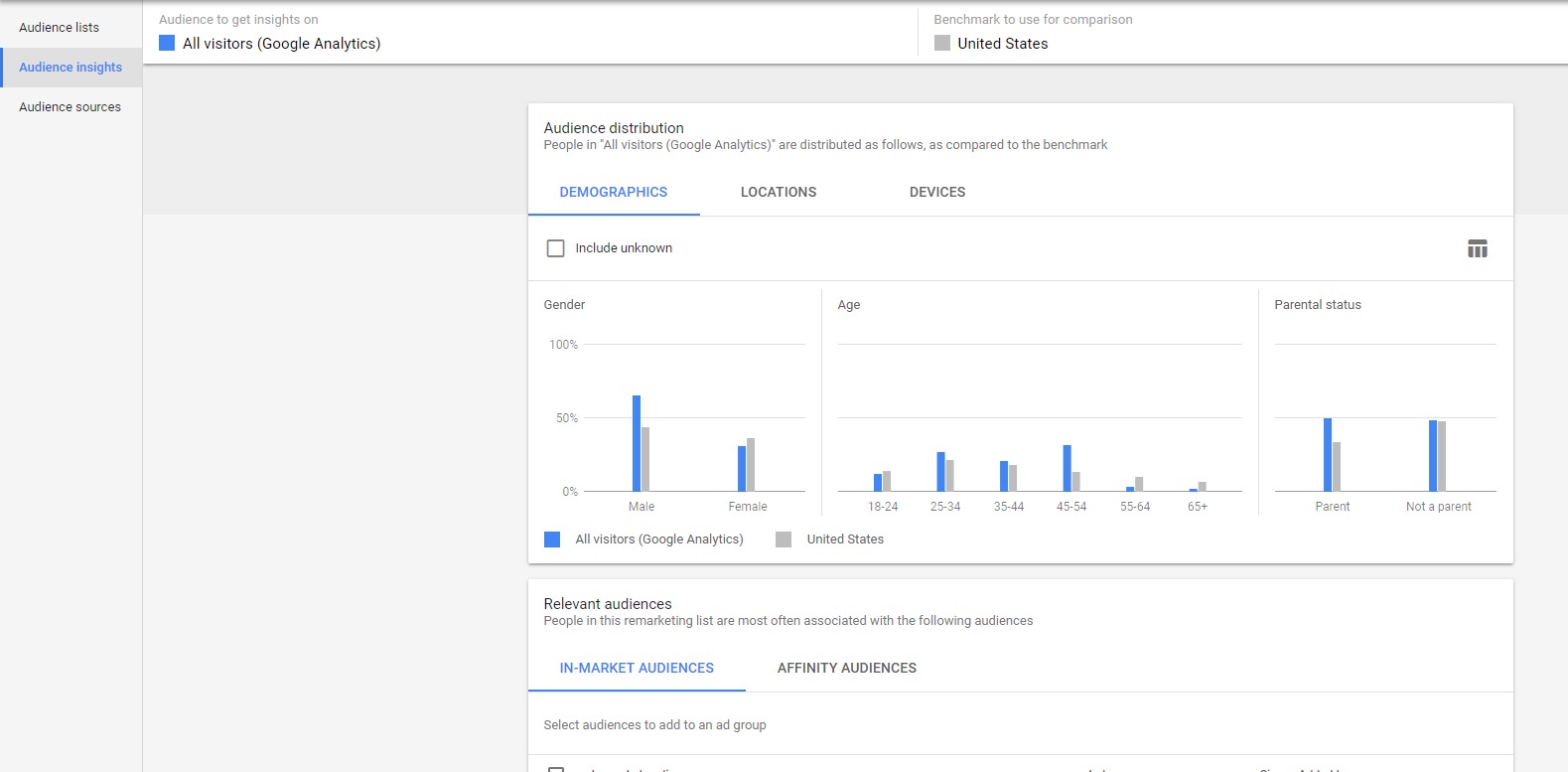

Tool #3 – In-platform insights (Google Customer Match)Google Customer Match is a great way to get insights on your customers if you have not yet run paid search or social campaigns. You can load in a customer email list and see data on your customers to include details like gender, age, parental status, location, and relevant Google Audiences (in-market audiences and affinity audiences). These are great options to layer onto your campaigns to gain more data and potentially bid up on these users or to target and bid in a separate campaign to stay competitive on broader terms that might be too expensive.

Tool #4 – External insights (competitor research)There are a few tools that help you conduct competitor research in paid search and paid social outside of the engines and internal data sources. SEMrush and SpyFu are great for understanding what search queries you are showing up for organically. These tools also allow you to do some competitive research to see what keywords competitors are bidding for, their ad copy, and the search queries they are showing up for organically. All of these will help you understand how your target consumer is interacting with your brand on the SERP. MOAT and AdEspresso are great tools to gain insights into how your competition portrays their brand on the Google Display Network (GDN) and Facebook. These tools will show you the ads that are currently running on GDN and Facebook, allowing you to further understand messaging and offers that are being used. Tool #5 – Internal data sourcesThere might not be a large amount of data in your CRM system, but you can still glean customer insights. Consider breaking down your data into different segments, including top customers, disqualified leads, highest AOV customers, and highest lifetime value customers. Once you define those segments, you can identify your most-desirable and least-desirable customer groups and bid/target accordingly. ConclusionWhether you’re just starting a digital marketing program or want to take a step back to understand your target audience without the benefit of a big budget, you have options. Dig into the areas defined in this post, and make sure that however you’re segmenting your audiences, you’re creating ads and messaging that most precisely speak to those segments. Lauren Crain is a Client Services Lead in 3Q Digital’s SMB division, 3Q Incubate. The post Five tools for audience research on a tiny budget appeared first on Search Engine Watch. 
from https://searchenginewatch.com/2019/04/08/five-tools-for-audience-research-on-a-tiny-budget/

Brian McCullough, who runs Internet History Podcast, also wrote a book named How The Internet Happened: From Netscape to the iPhone which did a fantastic job of capturing the ethos of the early web and telling the backstory of so many people & projects behind its evolution. I think the quote which best captures the magic of the early web is:

The part I bolded in the above quote from the book really captures the magic of the Internet & what pulled so many people toward the early web. The current web - dominated by never-ending feeds & a variety of closed silos - is a big shift from the early days of web comics & other underground cool stuff people created & shared because they thought it was neat. Many established players missed the actual direction of the web by trying to create something more akin to the web of today before the infrastructure could support it. Many of the "big things" driving web adoption relied heavily on chance luck - combined with a lot of hard work & a willingness to be responsive to feedback & data.

The book offers a lot of color to many important web related companies. And many companies which were only briefly mentioned also ran into the same sort of lucky breaks the above companies did. Paypal was heavily reliant on eBay for initial distribution, but even that was something they initially tried to block until it became so obvious they stopped fighting it:

Here is a podcast interview of Brian McCullough by Chris Dixon. How The Internet Happened: From Netscape to the iPhone is a great book well worth a read for anyone interested in the web.

from http://www.seobook.com/how-internet-happened-netscape-iphone

At the frontlines in the battle for SEO is Google Search Console (GSC), an amazing tool that makes you visible in search engine results pages (SERPs) and provides an in-depth analysis of web traffic being routed to your doorstep. And it does all this for free. If your website marks your presence in cyberspace, GSC boosts viewership and increases traffic, conversions, and sales. In this guide, SEO strategists at Miromind explain how you benefit from GSC, how you integrate it with your website, and what you do with its reports to strategize the domain dominance of your brand.

What is Google Search Console (GSC)?

Created by Google, the Google Webmaster Tools (GWT) initially targeted webmasters. Offered by Google as a free of cost service, GWT metamorphosed into its present form, the Google Search Console (GSC). It’s the cutting edge tool widely used by an exponentially diversifying group of digital marketing professionals, web designers, app developers, SEO specialists, and business entrepreneurs. For the uninitiated, GSC tells you everything that you wish to know about your website and the people who visit it daily. For example, how much web traffic you’re attracting, what people are searching for on your site, the kind of platform (mobile, app, desktop) people are using to find you, and more importantly, what makes your site popular. Then GSC takes you on a subterranean dive to find and fix errors, design sitemaps, and check file integrity.

Precisely what does Google Search Console do for you? These are the benefits.

1. Search engine visibility improves

Ever experienced the sinking sensation of having done everything demanded of you for creating a great website, but people who matter can’t locate you in a simple search? Search Console makes Google aware that you’re online.

2.
The virtual image remains current and updatedWhen you’ve fixed broken links and coding issues, Search Console helps you update the changes in such a manner that Google’s search carries an accurate snapshot of your site minus its flaws. 3. Keywords are better optimized to attract trafficWouldn’t you agree that knowing what draws people to your website can help you shape a better user experience? Search Console opens a window to the keywords and key phrases that people frequently use to access your site. Armed with this knowledge, you can optimize the site to respond better to specific keywords. 4. Safety from cyber threatsCan you expect to grow business without adequate protection against external threats? Search Console helps you build efficient defenses against malware and spam, securing your growing business against cyber threats. 5. Content figures prominently in rich resultsIt’s not enough to merely figure in a search result. How effectively are your pages making it into Google rich results? These are the cards and snippets that carry tons of information like ratings, reviews, and just about any information that results in better user experience for people searching for you. Search console gives you a status report on how your content is figuring in rich results so you can remedy a deficit if detected. 6. Site becomes better equipped for AMP complianceYou’re probably aware that mobile friendliness has become a search engine ranking parameter. This means that the faster your pages load, the more user-friendly you’re deemed to be. The solution is to adopt accelerated mobile pages (AMP), and Search Console helpfully flags you out in the areas where you’re not compliant. 7. Backlink analysisThe backlinks, the websites that are linking back to your website give Google an indication of the popularity of your site; how worthy you are of citation. 
With Search Console, you get an overview of all the websites linking to you, and you get a deeper insight into what motivates and sustains your popularity. 8. The site becomes faster and more responsive to mobile usersIf searchers are abandoning your website because of slow loading speeds or any other glitch, Search Console alerts you so you can take remedial steps and become mobile-friendly. 9. Google indexing keeps pace with real-time website changesSignificant changes that you make on the website could take weeks or months to figure in the Google Search Index if you sit tight and do nothing. With search console, you can edit, change, and modify your website endlessly, and ensure the changes are indexed by Google instantaneously. By now you have a pretty good idea why Google Search Console has become the must-have tool for optimizing your website pages for improved search results. This also helps ensure that your business grows in tandem with the traffic that you’re attracting and converting. Your eight step guide on how to use Google Search Console1. How to set up your unique Google Search Console accountAssuming that you’re entirely new to GSC, your immediate priority is to add the tool and get your site verified by Google. By doing this, you’ll be ensuring that Google classifies you unambiguously as the owner of the site, whether you’re a webmaster, or merely an authorized user. This simple precaution is necessary because you’ll be privy to an incredibly rich source of information that Google wouldn’t like unauthorized users to have access to. You can use your existing Google account (or create a new one) to access Google Search Console. It helps if you’re already using Google Analytics because the same details can be used to login to GSC. Your next step is to open the console and click on “Add property”.

By adding your website URL into the adjacent box, you connect the site to the console so you can start using its incredible array of features. Take care to enter the URL exactly as your site uses it, including the “https” or “www” prefix, so Google loads the right data.

2. How to enable Google to verify your site ownership

Option one

How to add an HTML tag to help Google verify ownership

Once you have established your presence, Google will want to verify your site. At this stage, it helps to have some experience of working in HTML. It’ll be easier to handle the files you’re uploading; you’ll have a better appreciation of how the website’s size influences the Google crawl rate, and gain a clearer understanding of the Google programs already running on your website.

If all this sounds like rocket science, don’t fret because we’ll be hand-holding you through the process. Your next step is to open your homepage code and paste the HTML tag provided by Search Console within the <head> section of your site’s HTML code. The newly pasted code can coexist with any other code in the <head> section; it’s of no consequence. An issue arises only if you don’t see a <head> section, in which case you’ll need to create one to hold the Search Console code so that Google can verify your site.

Save your work and come back to the homepage to view the source code; the console verification code should be clearly visible in the <head> section, confirming that you have done the embedding correctly. Your next step is to navigate back to the console dashboard and click “Verify”. At this stage, you’ll see one of two messages: a screen confirming that Google has verified the site, or a pop-up listing onsite errors that need to be rectified before verification can be completed. Once you’ve followed these steps, Google will confirm your ownership of the site.

It’s important to remember that once the Google Search Console code has been embedded onsite and verified, any attempt to tamper with or remove the code will have the effect of undoing all the good work, leaving your site in limbo.

Getting Google Search Console to verify a WordPress website using HTML tag

Even if you have a WordPress site, there’s no escape from the verification protocol if you want to link the site and reap the benefits of GSC. Assuming that you’ve come through the stage of adding your site to GSC as a new property, this is what you do.

The WordPress SEO plugin by Yoast is widely acknowledged to be an awesome SEO solution tailor-made for WordPress websites. Installing and activating the plugin gives you a conduit to the Google Search Console. Once Yoast is activated, open the Google Search Console verification page, and click the “Alternate methods” tab to get to the HTML tag.
You’ll see a central box highlighting a meta tag, with certain instructions appearing above the box. Ignore these instructions; select and copy only the code located at the end of the thread (and not the whole thread).

Now return to the website homepage and click through SEO > Dashboard. In the new screen, clicking “Webmaster tools” opens the “Webmaster tools verification” window. The window displays three boxes; be sure to paste the previously copied HTML code into the Google Search Console box, and save the changes.
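
Before clicking “Verify”, it can help to confirm that the tag really is sitting in the head section of the page. What follows is a minimal Python sketch and not part of Search Console itself. It assumes the tag is a meta element named google-site-verification; the sample page, the helper names, and the PLACEHOLDER_TOKEN value are made up for illustration.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects google-site-verification meta tags and records
    whether each one appears inside <head> or somewhere else."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.tokens_in_head = []
        self.tokens_elsewhere = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "head":
            self.in_head = True
        elif tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "google-site-verification":
                target = self.tokens_in_head if self.in_head else self.tokens_elsewhere
                target.append(attr.get("content"))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_verification_tokens(html_text):
    """Return (tokens found in <head>, tokens found outside <head>)."""
    finder = VerificationTagFinder()
    finder.feed(html_text)
    return finder.tokens_in_head, finder.tokens_elsewhere


# Hypothetical page source; in practice you would paste or download your own.
SAMPLE_PAGE = """<html><head>
<title>Home</title>
<meta name="google-site-verification" content="PLACEHOLDER_TOKEN">
</head><body><p>Hello</p></body></html>"""

in_head, elsewhere = find_verification_tokens(SAMPLE_PAGE)
print("In <head>:", in_head)
print("Outside <head>:", elsewhere)
```

If the first list comes back empty, or the token only shows up in the second list, the tag is probably missing or pasted in the wrong place.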

Now, all you have to do is return to the Google Search Console and click “Verify”, upon which the console will confirm that verification is a success. You are now ready to use GSC on your WordPress site.

Option two

How to upload an HTML file to help Google verify ownership

This is your second verification option. Once you’re in Google Search Console, proceed from “Manage site” to “Verify this site” to locate the “HTML file upload” option. If you don’t find the option under the recommended method, try the “Other verification methods”.

Once you’re there, you’ll be prompted to download an HTML file which must be uploaded to its specified location. If you change the file in any manner, Search Console won’t be able to verify the site, so take care to maintain the integrity of the download. After the HTML file has been uploaded, go back to Search Console and click “Verify”. If everything has been uploaded correctly, you will see a page letting you know that the site has been verified. Once again, as in the first option we’ve listed, don’t change, modify, or delete the HTML file as that’ll bring the site back to unverified status.

Option three

Using the Google Tag Manager route for site verification

Before you venture into the Google Search Console, you might find it useful to get the hang of Google Tag Manager (GTM). It’s a free tool that helps you manage and maneuver marketing and analytics tags on your website or app. You’ll observe that GTM doubles up as a useful tool to simplify site verification for Google Search Console. If you intend to use GTM for site verification, there are two precautions you need to take: open your GTM account and enable the “View, Edit, and Manage” mode, and ensure that the GTM code sits immediately after the opening <body> tag in your HTML code. Once you’re done with these simple steps, return to GSC and follow this route: Manage site > Verify this site > Google Tag Manager. By clicking the “Verify” option in Google Tag Manager, you should get a message indicating that the site has been verified.

Once again, as in the previous options, never attempt to alter the GTM code on your site as that may bring the site back to its unverified status.

Option four

Securing your status as the domain name provider

Once you’re done with the HTML file tagging or uploading, Google will prompt you to verify the domain that you’ve purchased or the server where your domain is hosted, if only to prove that you are the absolute owner of the domain and all its subdomains or directories. Open the Search Console dashboard and zero in on the “Verify this site” option under “Manage site”. You should be able to locate the “Domain name provider” option either under the “Recommended method” or the “Alternate method” tab. Once you select “Domain name provider”, you’ll be shown a listing of domain hosting sites that Google provides for easy reference.

At this stage, you have two options. If your host doesn’t show up in the list, click the “Other” tab to receive guidelines on creating a DNS TXT record for your domain provider. In some instances, the DNS TXT record may not match your provider. If that mirrors your dilemma, create a DNS TXT or CNAME record that is customized for your provider.

3. Integrating the Google Analytics code on your site

If you’re new to Google Analytics (GA), this is a good time to get to know this free tool. It gives you amazing feedback which adds teeth to digital marketing campaigns. At a glance, GA helps you gather and analyze key website parameters that affect your business. It tracks the number of visitors converging on your domain, the time they spend browsing your pages, and the specific keywords in your site that are most popular with incoming traffic. Most of all, GA gives you a fairly comprehensive idea of how efficiently your sales funnel is attracting leads and converting customers.

The first thing you need to do is to check whether the website has the GA tracker code inserted in the <head> segment of the homepage HTML code. If the GA code is to carry out its tracking functions correctly, you have to ensure that the code is placed only in the <head> segment and not elsewhere, such as in the <body> segment. Back in the Google Search Console, follow the path Manage site > Verify this site until you come to the “Google Analytics tracking code” option, and follow the guidelines that are displayed. Once you get an acknowledgment that the GA code is verified, refrain from making any changes to the code to prevent the site from reverting to unverified status.

Google Analytics vs. Google Search Console: knowing the difference and appreciating the benefits

For a newbie, both Google Analytics and Google Search Console appear like they’re focused on the same tasks and selling the same pitch, but nothing could be further from the truth.
Read also: An SEO’s guide to Google Analytics

GA’s unrelenting focus is on the traffic that your site is attracting. GA tells you how many people visit your site, the kind of platform or app they’re using to reach you, the geographical source of the incoming traffic, how much time each visitor spends browsing what you offer, and which are the most searched keywords on your site. While GA gives you an in-depth analysis of the efficiency (or otherwise) of your marketing campaigns and customer conversion pitch, Google Search Console peeps under the hood of your website to show you how technically sound you are in meeting the challenges of the internet. GSC is active in providing insider information.

GSC also opens a window to manual actions, if any, issued against your site by Google for perceived non-compliance with the Webmaster guidelines. If you open the manual actions report in the Search Console message center and see a green check mark, consider yourself safe. But if there’s a listing of non-compliances, you’ll need to fix either the individual pages or sometimes the whole website and place the matter before Google for a review.

Manual actions must be looked into because failure to respond places your pages in danger of being omitted from Google’s search results. Sometimes, your site may attract manual action for no fault of yours, like a spammy backlink that violates Webmaster quality guidelines, and which you can’t remove.

In such instances, you can use the GSC “Disavow Tool” to upload a text file listing the affected URLs on the disavow links tool page in the console. If approved, Google will recrawl the site and reprocess the search results pages to reflect the change.

Basically, GA is more invested in the kind of traffic that you’re attracting and converting, while GSC shows you how technically accomplished your site is in responding to searches, and in defining the quality of user experience.

Packing power and performance by combining Google Analytics and Google Search Console

You could treat GA and GSC as two distinct sources of information and analyze the reports you access separately, and the world would still go on turning. But it may be pertinent to remember that both tools present information in vastly different formats even in areas where they overlap. It follows that integrating both tools presents you with additional analytical reports that you’d otherwise be missing; reports that go the extra mile in giving you the kind of design and marketing inputs that lay the perfect foundation for great marketing strategies.

Assuming you’re convinced of the need for combining GA and GSC, this is what you do. Open the Google Search Console, navigate to the hub-wheel icon, and click the “Google Analytics Property” tab.

This shows you a listing of all the GA accounts that are operational in the Google account. Hit the save button on all the accounts that you’ll be focusing on, and with that small step, you’re primed to extract maximum juice from the excellent analytical reporting of the GA-GSC combo. Just remember to carry out this step only after the website has been verified by Google by following the steps we outlined earlier.

What should you do with Google Search Console?

1. How to create and submit a sitemap to Google Search Console

Is it practical to hand over the keys to your home (website) to Google and expect Google to navigate the rooms (webpages) without assistance? You can help Google bots do a better job of crawling the site by submitting the site’s navigational blueprint, or sitemap.

The sitemap is your way of showing Google how information is organized throughout your webpages. You can also position valuable details in the metadata, such as information on textual content, images, videos, and podcasts, and even mention the frequency with which the page is updated. We’re not implying that a sitemap is mandatory for Google Search Console, and you’re not going to be penalized if you don’t submit one. But it is in your interests to ensure that Google has access to all the information it needs to do its job and improve your visibility in search engines, and the sitemap makes the job easier. A sitemap works in your favor most of all when you have an extensive website with many pages and subcategories.

For starters, decide which web pages you want Google bots to crawl, and then specify the canonical version of each page. What this means is that you’re telling Google to crawl the original version of any page to the exclusion of all other versions. Then create a sitemap either manually or using a third-party tool. At this stage, you have the option of adding the sitemap to the robots.txt file in your source code or submitting it directly to the search console.
Read also: Robots.txt best practice guide + examples

Assuming that you’ve taken the trouble to get the site verified by GSC, return to the search console, and then navigate to “Crawl” and its subcategory “Sitemaps.” On clicking “Sitemaps” you will see a field “Add a new sitemap”. Enter the URL of your sitemap, which should be a .xml file, and then click “Submit”.
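
If you would rather create the sitemap with a short script than by hand or with a third-party tool, the idea can be sketched in Python as below. Treat it as an illustration under stated assumptions: the example.com URLs and the build_sitemap helper are hypothetical, and only a few of the optional tags from the sitemaps.org protocol (lastmod and changefreq) are shown.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from dicts holding 'loc' and, optionally,
    'lastmod' and 'changefreq'. Returns the document as a string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        for field in ("lastmod", "changefreq"):
            if page.get(field):
                ET.SubElement(url, field).text = page[field]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical canonical URLs; replace with the pages you want crawled.
pages = [
    {"loc": "https://www.example.com/", "changefreq": "weekly"},
    {"loc": "https://www.example.com/blog/", "lastmod": "2019-01-15"},
]

with open("sitemap.xml", "w", encoding="utf-8") as handle:
    handle.write(build_sitemap(pages))
```

The resulting sitemap.xml would then be uploaded to the site, and its URL entered in the “Add a new sitemap” field.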

With these simple steps, you’ve effectively submitted your sitemap to Google Search Console.

2. How to modify your robots.txt file so search engine bots can crawl efficiently

There’s a file embedded in your website that doesn’t figure too frequently in SEO circles. The minor tweaking of this file has major SEO boosting potential. It’s virtually a can of high-potency SEO juice that a lot of people ignore and very few open.

It’s called the robots exclusion protocol or standard. If that freaks you out, we’ll keep it simple and call it the robots.txt file. Even without technical expertise, you can open your source code and you’ll find this file.

The robots.txt is your website’s point of contact with search engine bots. Before tuning in on your webpages, the search bot will peep into this text file to see if there are any instructions about which pages should be crawled and which pages can be ignored (that’s why it helps to have your sitemap stored here). The bot will follow the robots exclusion protocol that your file suggests regarding which pages are allowed for crawling and which are disallowed. This is your site’s way of guiding search engines to pages that you wish to highlight, and it also helps exclude content that you do not want to share.

There’s no guarantee that robots.txt instructions will be followed by bots, because bots designed for specific jobs may react differently to the same set of instructions. Also, the system doesn’t block other websites from linking to your content even if you wouldn’t want the content indexed.

Before proceeding further, please ensure that you’ve already verified the site; then open the GSC dashboard and click the “Crawl” tab to proceed to “robots.txt Tester.”
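
To get a feel for how allow and disallow rules play out before you open the tester, here is a small Python sketch using the standard library’s urllib.robotparser. The robots.txt content and the example.com URLs are invented for illustration. Keep in mind that Python’s parser applies the first matching rule, whereas Google’s crawler picks the most specific matching rule, so results can differ for more complicated files than this one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an imaginary site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)

# A page under the disallowed directory versus an ordinary page.
blocked = rules.can_fetch("Googlebot", "https://www.example.com/private/report.html")
allowed = rules.can_fetch("Googlebot", "https://www.example.com/blog/")
print("private page crawlable:", blocked)
print("blog page crawlable:", allowed)
```

The robots.txt Tester in Search Console lets you run the same kind of check against your live file, directly in the browser.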

This tool enables you to do three things: